Chapter 10: Lateralization and Language

10.2 Language

Language is one of Homo sapiens’ greatest intellectual evolutionary accomplishments. Using language, we are able to communicate very complex concepts, such as survival instructions (Don’t eat those berries because they taste weird and you’ll get sick) or a shared belief in the existence of complex stories (Hang stockings by the chimney and you’ll get presents if you were good). Language, when used in these ways, has a powerful influence on behavior, and modern humans rely heavily on language in every aspect of society.

Components of language

Speech pathology experts have identified at least four distinct components for describing different aspects of language. The smallest unit is the phoneme, which is an individual sound that generally has no meaning on its own. For example, the word “map” can be split into three phonemes: the “m” sound, the short “/ă/” sound, and the “p” sound. The next larger unit of language is the morpheme, the smallest combination of phonemes that conveys an idea, such as “cat”. Suffixes such as “-s” and “-ing” also convey ideas (plurality and ongoing action, respectively), so they are also considered morphemes.

Syntax is the next higher level of language: the information conveyed when words are combined in a specific way to produce meaning at the level of phrases and sentences. For example, a statement such as “He gave a gift to his brother” conveys the same information as “He gave his brother a gift”, even though the word order is different. The grammatical rules of many languages specify the order of subjects, verbs, and objects, and inappropriate deviation from these rules can change the meaning of the sentence dramatically.

Semantics refers to the understanding of meaning, especially the meaning of words in relationship to one another in a phrase, sentence, or paragraph. Extracting meaning from statements not meant to be taken literally (such as a hungry person exclaiming “I’m so hungry, I could eat a horse!”) and identification of the meaning of a word under two different contexts (such as in the sentence “I held a nail between my fingers, but when I swung the hammer, I hit my nail instead.”) fall under the category of semantics.

Brain structures involved with language

Whereas the left and right hemispheres of the brain are mostly symmetrical, one of the biggest asymmetries is related to the structures responsible for language. Myers and Sperry observed that split-brain people can verbally report observations made with the left hemisphere, but have difficulty reporting information held only by the right hemisphere. This suggests that the left hemisphere is dominant for language functions. It is estimated that about 90% of right-hand-dominant people and about 50% of left-hand-dominant people use their left hemisphere for language-related functions. However, this does not mean that the other hemisphere does not contribute to language. The right hemisphere, for example, shows activation during the use of nonliteral language, such as in metaphor production or irony comprehension. In addition to the split-brain case studies, several other significant pieces of evidence support left hemispheric dominance for language.

https://upload.wikimedia.org/wikipedia/commons/1/11/1-s2.0-S0967586810002766-gr2.jpg

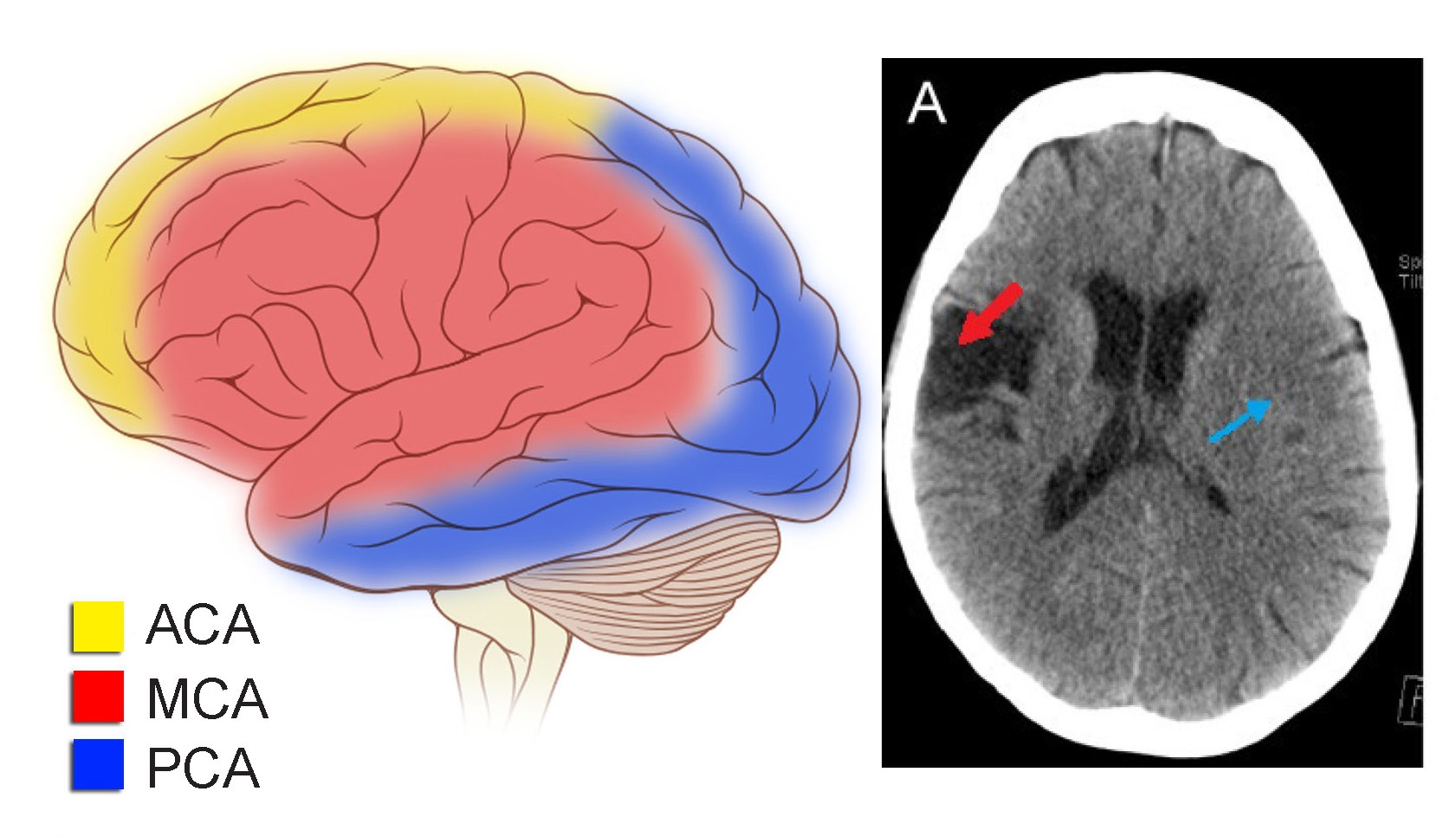

People with left hemisphere lesions may lose their language capacities. A stroke of the left middle cerebral artery often leads to a variety of language-related deficits. Similar injuries sometimes follow brain surgery, traumatic brain injury, or brain infections, and these also result in language deficits when they are localized to the left hemisphere.

Experimental methods have allowed researchers to study the lateralization of language without causing any permanent damage. The Wada test is the most reliable method by which hemispheric lateralization of language can be determined. Named for the Japanese-born neurologist Juhn Wada, the test is a presurgical assessment used to minimize the risk of a person losing their language capacity in the process of brain surgery. The protocol begins with the surgical team asking the patient to hold up both hands and wiggle their fingers while counting aloud. The patient then receives an infusion of sodium amytal, a GABA receptor positive allosteric modulator that acts as an anesthetic. Because the drug is infused into one internal carotid artery, it is delivered into just one hemisphere of the brain with little leakage into the other. When the anesthetic perfuses through the left hemisphere, the patient’s right hand loses muscle tone and its fingers stop moving (remember the contralateral organization of the motor control system, chapter 10). If language is lateralized to this hemisphere, as it is for most people, the patient will also be unable to count during this time. Within minutes, the anesthetic is cleared from the brain, and the wiggling and counting resume. If the patient is right hemisphere dominant for language, then they will be able to keep counting even though the fingers stop moving. The procedure is then repeated while the anesthetic is perfused into the other hemisphere.
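The decision logic of the Wada test can be sketched in a few lines of Python. This is only an illustration of the reasoning described above, not a clinical tool: the function name `interpret_wada`, its parameters, and the "bilateral" and "inconclusive" labels are assumptions introduced here for the example.

```python
def interpret_wada(counting_stops_left: bool, counting_stops_right: bool) -> str:
    """Infer the language-dominant hemisphere from two injections.

    Each argument records whether the patient stopped counting while
    that hemisphere was anesthetized. The labels for the last two
    outcomes are assumptions made for this sketch.
    """
    if counting_stops_left and not counting_stops_right:
        return "left"    # the typical result for most people
    if counting_stops_right and not counting_stops_left:
        return "right"
    if counting_stops_left and counting_stops_right:
        return "bilateral"      # assumed label: speech halts either way
    return "inconclusive"       # assumed label: speech never halts


# Counting stops only when the left hemisphere is anesthetized.
print(interpret_wada(counting_stops_left=True, counting_stops_right=False))
```

Note that finger movement is not an input to the function. In the protocol, the loss of movement in the contralateral hand only confirms that the anesthetic reached the intended hemisphere.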

The Wada test, because of its invasive nature and occasional side effects (pain, infection, and seizure or stroke in very rare cases), has been used less frequently as functional brain imaging methods became cheaper and more available through the 2000s. Functional magnetic resonance imaging (fMRI) is now a preferred test of hemispheric dominance. To conduct these tests, a person is placed in the imaging machine and asked to perform a series of language tasks, such as listing several items of a given category, or listening to a conversation in preparation for follow-up questions. During this process, the fMRI shows the medical team which half of the brain is more active during the language tasks. These imaging-based tests have been found to be as accurate as the Wada test in determining lateralization of language functions.
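One common way to summarize which hemisphere “shows greater activity” is a laterality index, LI = (L − R) / (L + R), where L and R are activation measures from the left and right hemispheres. The sketch below is a minimal illustration under stated assumptions: the function names, the use of active-voxel counts as the activation measure, and the ±0.2 cutoff for calling a result bilateral are all choices made for this example, and laboratories differ in how they compute and threshold the index.

```python
def laterality_index(left_activation: float, right_activation: float) -> float:
    """Return (L - R) / (L + R): +1 is fully left, -1 is fully right."""
    if left_activation < 0 or right_activation < 0:
        raise ValueError("activation measures must be non-negative")
    total = left_activation + right_activation
    if total == 0:
        raise ValueError("no activation in either hemisphere")
    return (left_activation - right_activation) / total


def classify_dominance(li: float, threshold: float = 0.2) -> str:
    """Label an index as left, right, or bilateral (the cutoff is an assumption)."""
    if li > threshold:
        return "left"
    if li < -threshold:
        return "right"
    return "bilateral"


# Hypothetical counts of active voxels in each hemisphere's language regions.
li = laterality_index(left_activation=300, right_activation=100)
print(li, classify_dominance(li))  # prints: 0.5 left
```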

Language Lateralisation Through Laterality Index Deconstruction. Front. Neurol. 10:655. doi: 10.3389/fneur.2019.00655

Across the language-dominant hemisphere, a few brain regions contribute significantly to language functions. When something goes wrong with these areas, a person may develop aphasia, a language disorder. It is estimated that about 180,000 new cases of aphasia are diagnosed in the United States annually. Stroke is a common cause of aphasia, but other neurological insults such as traumatic brain injury or subdural hematoma can also induce aphasia. As with nearly everything in biology, severity varies widely, from very minor cases to much more severe ones. Speech therapy can help a patient recover from aphasia, and this progressive restoration of function is a demonstration of the brain’s capacity for plasticity and remodeling.

Expressive (or non-fluent; or Broca’s) aphasia

One of the first language-related cortical structures to be identified was the posterior inferior frontal gyrus (IFG). Damage to this area leads to difficulty with the production of language. In the 1860s, a patient named Louis Victor Leborgne had a very unusual condition: he could only speak one syllable. For Leborgne, the syllable “tan” meant everything, from “yes” to “no” to “hat” to “thirty-four”. Leborgne would say “tan” while gesturing emphatically, scream “TAN TAN!!” when angry, and whisper “tan” when telling secrets. Because of this, the staff at the hospital called him Patient Tan.

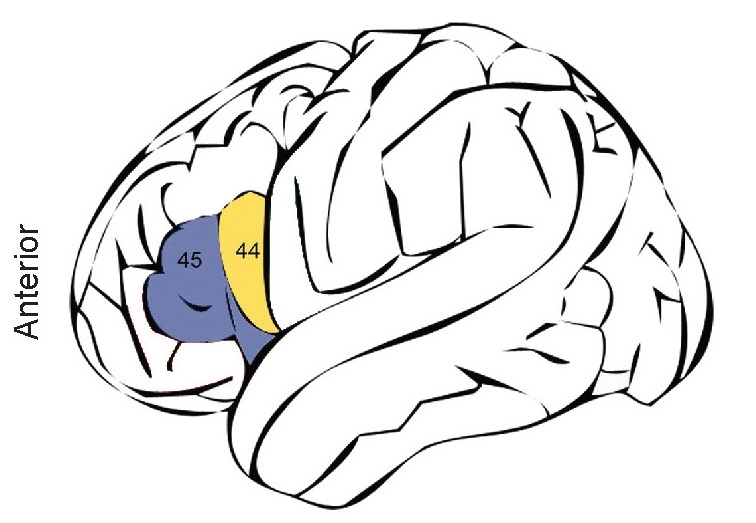

When Patient Tan died, the French physician Paul Broca performed an autopsy on his brain. Broca discovered a huge lesion about the size of a “chicken’s egg” in the left hemisphere, just dorsal to the lateral fissure in the frontal lobe. Soon after, Broca performed autopsies on the brains of seven other patients with similar language difficulties, all with the same prominent injury to this portion of the frontal lobe. Because of the work that Broca did in correlating structure with function, the posterior IFG came to be called Broca’s area (see Figure 10.5).

Today, we understand that a localized injury to the IFG produces a form of aphasia called expressive aphasia (also called non-fluent aphasia or Broca’s aphasia). These patients have difficulty expressing themselves, speaking only in short, effortful phrases that use just nouns and verbs while omitting tenses, conjunctions, and prepositions. They speak haltingly, sometimes filling the silences in their sentences with filler phrases. The patients are profoundly aware of their deficit, leading to overwhelming frustration with their inability to communicate. They know what they want to say, but often can’t get it out. Interestingly, these patients do not have any significant impairment of comprehension.

Patients with IFG injury show similar expressive deficits regardless of the modality of their language. For example, when asked to write, they write slowly, using mostly nouns and verbs. Likewise, patients who use American Sign Language lose grammatical syntax and communicate slowly when signing.

Receptive (or fluent; or Wernicke’s) aphasia

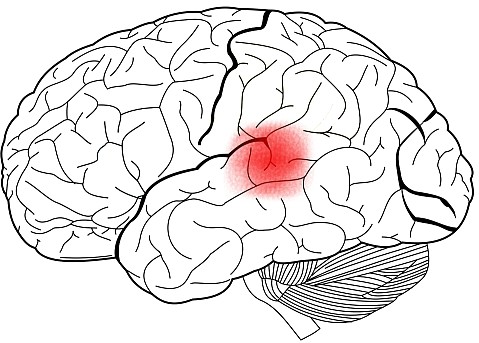

A different brain structure, the superior temporal gyrus (STG), is linked to language comprehension. This area is sometimes also called Wernicke’s area, named for the German physician Carl Wernicke, who studied a group of patients with a different form of aphasia than Broca’s. These patients had no deficits in the production of speech, but the words they used were very disorganized. They could speak complete sentences fluently, but their speech contained almost no substantial semantic content. Unlike Broca’s patients, Wernicke’s patients had dramatic impairments in comprehension. This language disorder is receptive aphasia (or fluent aphasia, or Wernicke’s aphasia).

While talking, people with receptive aphasia may produce incorrect or meaningless words without being aware of doing so, a symptom called paraphasia. These errors may be a mispronunciation of a word, perhaps sounding like a jumbling of syllables. They can happen at the level of the phoneme or morpheme, producing nonwords such as “emchurch” or “plehzd”. They also appear at the level of whole words, when a person incorrectly substitutes one word for another, as in the sentence “But I seem to be table you correctly, sir.”

Sometimes, people have difficulty recalling words, a symptom called anomia. This happens in the middle of a sentence and may be difficult to catch in casual conversation, since the person will often use vague language (“stuff” or “things”) or use several words in a roundabout fashion to describe what they are trying to say, a behavior called circumlocution (“red, it’s green, and yellow means be cautious, to keep people safe” instead of “traffic light”).

Conduction aphasia

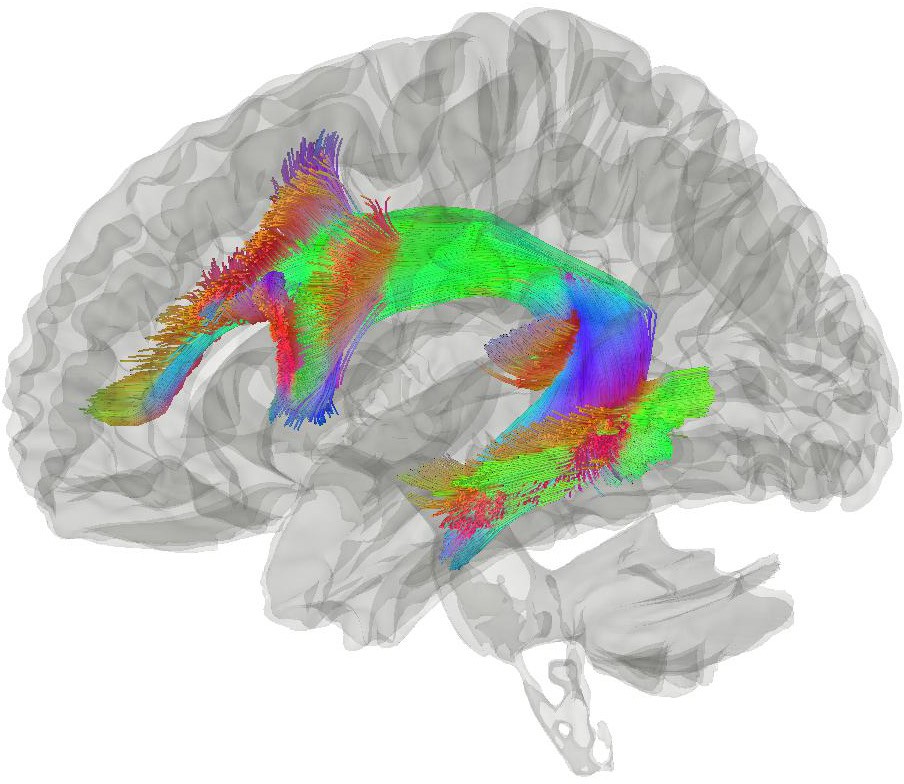

Early theories suggested that communication between the superior temporal gyrus (STG) and the IFG is important for healthy language production and comprehension. Anatomically, a band of white matter called the arcuate fasciculus spans these areas, originating in the STG and terminating in the IFG. When this structure is injured, people have difficulty repeating language they hear, a disorder called conduction aphasia. Generally, these patients display paraphasias when asked to repeat multisyllable words, often switching phonemes around within a single word.

These patients have no significant deficits in language production or comprehension, presumably because their IFG and STG are still intact and healthy. Conduction aphasia is less severe than expressive or receptive aphasia.

Global aphasia

Extensive brain damage to the left IFG, STG, and arcuate fasciculus may cause the most severe form of aphasia, global aphasia. Patients experience both expressive and receptive deficits, and can usually communicate using only single words or grunts. They also struggle with repeating words spoken to them. Global aphasia may be the first presentation after a major stroke of the left middle cerebral artery, and it may lessen in severity as the brain heals. If the other hemisphere is spared, patients with global aphasia can learn to communicate using pantomime or facial expressions.
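The four aphasia types described in this section can be told apart by three observations: whether speech production is fluent, whether comprehension is intact, and whether the patient can repeat what they hear. The following Python sketch encodes only the simplified descriptions given above. The class and function names are hypothetical, and real clinical assessment is far more graded than three yes-or-no answers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LanguageProfile:
    """Three simplified bedside observations (hypothetical structure)."""
    fluent_production: bool
    intact_comprehension: bool
    intact_repetition: bool


def classify_aphasia(profile: LanguageProfile) -> str:
    """Map a profile onto the four aphasia types described in this section."""
    if not profile.fluent_production and not profile.intact_comprehension:
        return "global aphasia"
    if not profile.fluent_production:
        return "expressive (Broca's) aphasia"
    if not profile.intact_comprehension:
        return "receptive (Wernicke's) aphasia"
    if not profile.intact_repetition:
        return "conduction aphasia"
    return "none of the four types described here"


# Fluent speech and good comprehension, but repetition is impaired.
patient = LanguageProfile(
    fluent_production=True,
    intact_comprehension=True,
    intact_repetition=False,
)
print(classify_aphasia(patient))  # prints: conduction aphasia
```

Repetition is only checked last because, in the descriptions above, it is the single feature that distinguishes conduction aphasia from unimpaired language.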

The Wernicke-Geschwind model

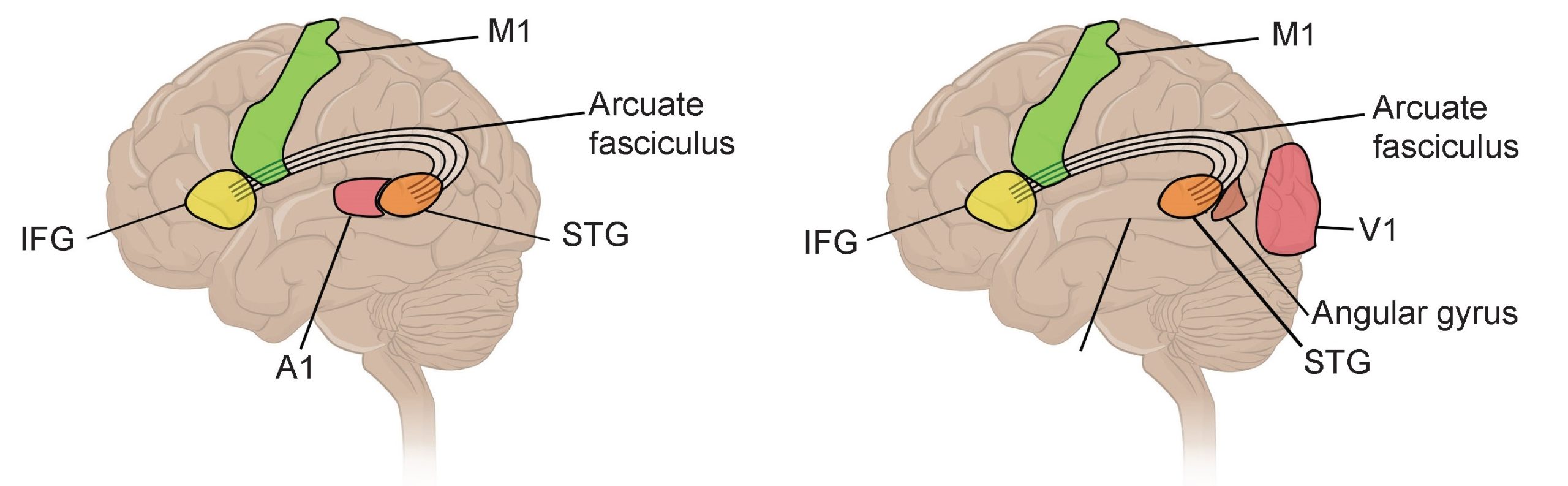

From case studies of injuries leading to aphasia, a few cortical structures emerge as being major contributors to language: the IFG, STG, and the arcuate fasciculus that connects the two. Two neurologists, Carl Wernicke and later Norman Geschwind, proposed the Wernicke-Geschwind model, which suggests that information is passed along through language structures in a linear pathway, and each section is responsible for a different aspect of language.

The model begins with a simple scenario: an interviewer asks you a question, and you answer. First, the sound information arrives at A1, the primary auditory cortex (see chapter 6 for more details). From there, the information is processed by the STG (Wernicke’s area), which extracts meaning from those sounds. The information then travels across the arcuate fasciculus to the IFG (Broca’s area), where neurons carry information related to the planning of language, such as coordinating the muscle movements that create the verbal response. Finally, those signals arrive at the motor cortex, which sends the descending signals that control the muscles required for speech.

Another component of the model proposes an explanation for a different situation: you read a question on a piece of paper and answer it verbally. Visual information arrives at V1, the primary visual cortex. The output of the visual cortices arrives at the angular gyrus, a parietal lobe structure just posterior to the STG. From here, the signal travels to the STG and continues on to the motor cortex, following the same pathway described above.
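Because the Wernicke-Geschwind model is a strictly linear pathway, it can be written down as an ordered list of stages. The sketch below is only an illustration of the model as described here. The names `wernicke_geschwind_route` and `stages_reached` are hypothetical, and the lesion function shows what the model would predict, which is not necessarily what is seen in real patients.

```python
# Stages shared by both scenarios, in the order the model proposes.
SHARED_ROUTE = [
    "STG (Wernicke's area)",
    "arcuate fasciculus",
    "IFG (Broca's area)",
    "motor cortex",
]

# Where the signal enters the pathway depends on how the question arrives.
INPUT_ROUTE = {
    "heard": ["A1 (primary auditory cortex)"],
    "read": ["V1 (primary visual cortex)", "angular gyrus"],
}


def wernicke_geschwind_route(modality: str) -> list[str]:
    """Return the ordered stages for a heard or read question."""
    if modality not in INPUT_ROUTE:
        raise ValueError("modality must be 'heard' or 'read'")
    return INPUT_ROUTE[modality] + SHARED_ROUTE


def stages_reached(modality: str, lesion: str) -> list[str]:
    """Return the stages the signal passes before reaching a lesioned stage."""
    route = wernicke_geschwind_route(modality)
    if lesion not in route:
        return route
    return route[: route.index(lesion)]


print(" -> ".join(wernicke_geschwind_route("read")))
print(stages_reached("heard", lesion="arcuate fasciculus"))
```

In the second call, the signal reaches A1 and the STG but never arrives at the IFG, which is how the model accounts for the repetition difficulty seen in conduction aphasia.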

This Wernicke-Geschwind model (Figure 10.8) was initially helpful for providing a framework for understanding language. But in modern times, we regard it as an oversimplified and outdated explanation of a complex behavior. Sometimes the model fails to accurately predict the nature of a patient’s aphasia even if the locus of a lesion has been precisely identified. Furthermore, some injuries to brain areas outside of those structures identified in the model produce aphasia.

Modern research indicates that language functions are not strictly localized as described by the Wernicke-Geschwind model. Instead, language is such a complex behavior that it depends on interactions among these areas and many others.

Dyslexia

Affecting an estimated 7-20% of the population, developmental dyslexia is a pronounced difficulty with identification of phonemes in printed words and a related difficulty with reading unfamiliar words. Challenges appear in preschool, when learning to decode phonemes is an expected developmental milestone. These difficulties are not a result of intellectual disability. However, dyslexia is not explicitly a language disorder, since patients generally have no difficulties with comprehension of spoken language.

Genetic factors contribute to risk, but a definitive neural mechanism behind dyslexia is still unknown. There are differences in the anatomy and activity of the cerebellum and some atypical lateralization in the temporal, parietal, and occipital lobes, suggesting that atypical communication from V1 to the language areas of the brain, or atypical memory of previously learned words, may contribute to symptoms.