Chapter 5: Attention

5.4. Feature Integration Theory

How do we find specific objects in complex scenes? Whether searching for your keys on a cluttered desk or looking for a friend in a crowd, your visual system must efficiently process and filter information. Research on visual search has helped reveal how attention and perception work together in this process. One especially influential account is Anne Treisman’s Feature Integration Theory (FIT) (Treisman & Gelade, 1980).

Treisman proposed that visual processing occurs in two distinct stages. In the first, pre-attentive stage, basic features such as color, orientation, and motion are registered automatically and in parallel across the visual field, without the need for focused attention (Treisman, 1977). When a target has a unique feature, it “pops out” from the background, so detection is quick and effortless regardless of how many distractors are present. In the second, focused-attention stage, features must be actively combined, or “bound,” into objects. This binding requires attention and proceeds serially, with items examined one at a time (Treisman & Gelade, 1980).

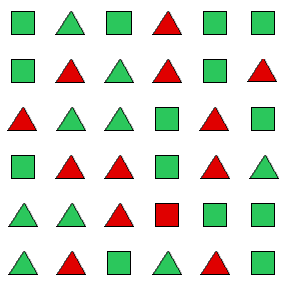

These two stages give rise to two types of visual search. In feature search, the target differs from the distractors by a single feature (such as a red line among blue lines, as in Figure 5.1), and detection is fast and efficient. Because this search relies on pre-attentive processing, reaction time stays roughly constant no matter how many distractors are present. In conjunction search, by contrast, the target is defined by a combination of features (like a red square among red triangles and green squares, as in Figure 5.5), and search is slower and less efficient. Reaction time rises as distractors are added because focused attention must be applied to the items in turn to bind their features together (Treisman & Gelade, 1980; Treisman & Schmidt, 1982).
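To make these predicted reaction-time patterns concrete, here is a minimal Python simulation, assuming that conjunction search is serial and self-terminating: on target-present trials attention visits items in random order and stops at the target, while on target-absent trials every item must be checked. The timing constants (`BASE_RT_MS`, `PER_ITEM_MS`, `NOISE_SD_MS`), the set sizes, and the function names are hypothetical, chosen only for illustration; they are not values reported by Treisman and Gelade (1980).

```python
import random
from statistics import mean

# Hypothetical timing parameters in milliseconds, chosen only for illustration.
BASE_RT_MS = 450.0   # assumed non-search time: encoding, decision, motor response
PER_ITEM_MS = 50.0   # assumed time to attend to one item and bind its features
NOISE_SD_MS = 30.0   # assumed trial-to-trial variability


def feature_search_rt(set_size: int, rng: random.Random) -> float:
    """Pre-attentive stage: the unique feature is detected in parallel,
    so set_size deliberately has no effect on the predicted RT."""
    return BASE_RT_MS + rng.gauss(0.0, NOISE_SD_MS)


def conjunction_search_rt(set_size: int, target_present: bool, rng: random.Random) -> float:
    """Focused-attention stage: items are inspected one at a time."""
    if target_present:
        # Self-terminating: stop at the target, which is equally likely
        # to be the 1st, 2nd, ..., or last item visited.
        items_inspected = rng.randint(1, set_size)
    else:
        # No target: every item must be checked before responding "absent".
        items_inspected = set_size
    return BASE_RT_MS + PER_ITEM_MS * items_inspected + rng.gauss(0.0, NOISE_SD_MS)


def simulate(set_sizes=(1, 5, 15, 30), n_trials: int = 2000, seed: int = 1) -> dict:
    """Mean simulated RT (ms) per set size for each search condition."""
    rng = random.Random(seed)
    results = {}
    for n in set_sizes:
        results[n] = {
            "feature": mean(feature_search_rt(n, rng) for _ in range(n_trials)),
            "conj_present": mean(conjunction_search_rt(n, True, rng) for _ in range(n_trials)),
            "conj_absent": mean(conjunction_search_rt(n, False, rng) for _ in range(n_trials)),
        }
    return results


if __name__ == "__main__":
    for n, rts in simulate().items():
        print(f"set size {n:2d}: feature {rts['feature']:5.0f} ms | "
              f"conjunction present {rts['conj_present']:5.0f} ms | "
              f"absent {rts['conj_absent']:5.0f} ms")
```

Under these assumptions the feature-search function comes out flat, the conjunction target-absent slope lands near `PER_ITEM_MS` per item, and the target-present slope lands near half of that, because on average only about (N + 1)/2 of N items are inspected before the target is found. A model in which every item is always checked would instead predict equal slopes for present and absent trials.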

Strong evidence for Feature Integration Theory comes from studies of illusory conjunctions and from patterns of search performance. When attention is divided or time is limited, people sometimes combine features from different objects incorrectly, for example reporting a red X when the display contained a red O and a blue X. This suggests that feature binding requires attention (Treisman & Schmidt, 1982). In addition, the contrast between flat reaction-time functions for feature search and rising functions for conjunction search suggests separate mechanisms for detecting features and for binding them (Treisman & Gelade, 1980).
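The “flat” versus “rising” contrast is usually summarized as a search slope: the increase in mean reaction time per additional display item, estimated by fitting a straight line to mean RT as a function of display size. The sketch below performs an ordinary least-squares fit in plain Python. The function name `search_slope` and the reaction times are invented for illustration and are not data from the studies cited above.

```python
def search_slope(set_sizes, mean_rts_ms):
    """Ordinary least-squares fit of RT = intercept + slope * set_size.

    Returns (slope in ms/item, intercept in ms)."""
    if len(set_sizes) != len(mean_rts_ms) or len(set_sizes) < 2:
        raise ValueError("need at least two (set size, RT) pairs of equal length")
    n = len(set_sizes)
    mean_x = sum(set_sizes) / n
    mean_y = sum(mean_rts_ms) / n
    sxx = sum((x - mean_x) ** 2 for x in set_sizes)
    if sxx == 0:
        raise ValueError("set sizes must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, mean_rts_ms))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented mean RTs (ms), for illustration only; not experimental data.
SET_SIZES = [1, 5, 15, 30]
FEATURE_RTS = [460, 455, 462, 458]        # roughly flat across set sizes
CONJUNCTION_RTS = [475, 575, 825, 1200]   # rises by 25 ms per added item

if __name__ == "__main__":
    for label, rts in [("feature", FEATURE_RTS), ("conjunction", CONJUNCTION_RTS)]:
        slope, intercept = search_slope(SET_SIZES, rts)
        print(f"{label:12s} slope = {slope:5.1f} ms/item, intercept = {intercept:5.0f} ms")
```

With these made-up values the feature-search slope is close to 0 ms/item and the conjunction-search slope is about 25 ms/item; in this framework, a near-zero slope is read as parallel, pre-attentive detection and a clearly positive slope as attention-dependent binding.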

The neural basis for these processes involves the parietal cortex, which plays a crucial role in attention and feature binding. Bilateral damage to this region can cause Bálint’s syndrome, whose symptoms highlight its importance for visual attention and feature integration. Patients with Bálint’s syndrome have difficulty shifting attention between objects, perform poorly on conjunction search, and experience more illusory conjunctions (Kesner, 2012). These clinical findings further support the distinction that Feature Integration Theory draws between pre-attentive feature processing and attention-dependent feature binding.

This registration of features before attention is engaged is an example of bottom-up processing, which is driven by the stimulus itself. Top-down processing, by contrast, occurs when we actively direct attention according to our goals or expectations. The interplay between these automatic and controlled processes helps explain how we can quickly notice salient features in the environment and also carry out detailed, purposeful visual search when needed (Wolfe & Horowitz, 2017).