Chapter 10: Central Auditory Processing and Hearing Loss

10.5. Sound Location vs Sound Identity

Sound Segregation

It is rare that we hear just one sound at a time. Usually, we’re hearing several things—a bird chirping, a car driving by, a conversation on the sidewalk—and we have to separate them from each other in order to make sense of them. This is called sound segregation (Figure 10.6). Thankfully, we have several tools to help us.

First, there is primitive auditory stream segregation, in which the brain groups sounds perceptually to form a consistent representation of an object from the sound it makes. A good example is listening to an orchestra. As the musicians play, we separate sounds with similar features (e.g., the blare of trumpets) from dissimilar sounds (e.g., the whisper of flutes). Grouping sounds by timbre, as in the trumpet/flute example, is one primitive strategy. Other primitive strategies are grouping by pitch and grouping by location.

The other process we can use to extract information from a mixture of sounds is schema-based analysis. A schema is a structure in our brain that holds and organizes the information we acquire throughout our lifetime. Schema-based strategies use this prior knowledge to pick out and make sense of a sound. Schema-based analysis is a top-down strategy in which the brain matches the sensory signal against knowledge stored in memory. For example, when you hear a distinct noise at the park and recognize it as a bird chirping, your schema of what a bird sounds like helps you differentiate the bird from all the other sounds in the park (Bregman, 1990).

Even with the help of primitive strategies like grouping by similarity and schema-based strategies like recognizing familiar voices, we still have to work to focus on a sound of interest in a complex auditory environment to hear it. Imagine talking to someone in a loud, crowded environment and trying to make out what they are saying. We are able to hear the sound of interest (the other person’s voice) by focusing intently on it. The task of picking out a certain sound in a complex auditory environment is known as the “cocktail party problem” (Cherry, 1953).

Exercises

- In the cocktail party problem, an individual picks out a target sound in a noisy environment. The listener manages this by:

A. making background noise less interesting to the individual listening

B. subconsciously increasing attention to a sound

C. making the individual instinctively leave a noisy environment so that they can listen more clearly

D. subconsciously tuning out background noise

Answer: B

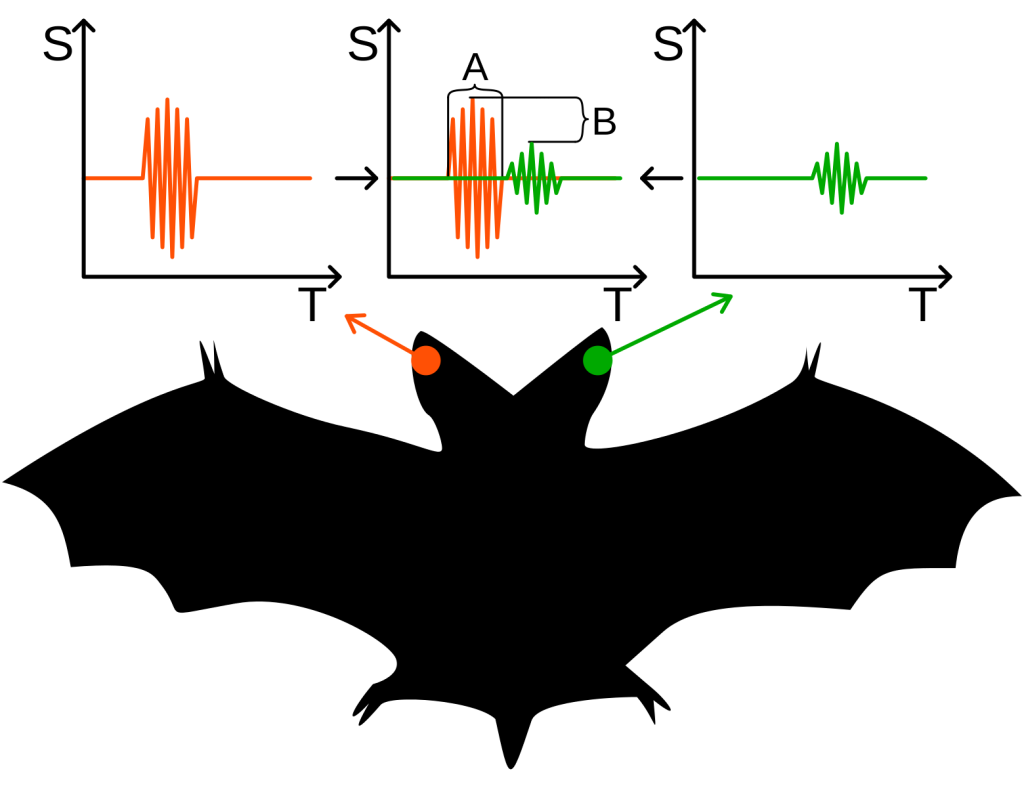

Auditory Localization

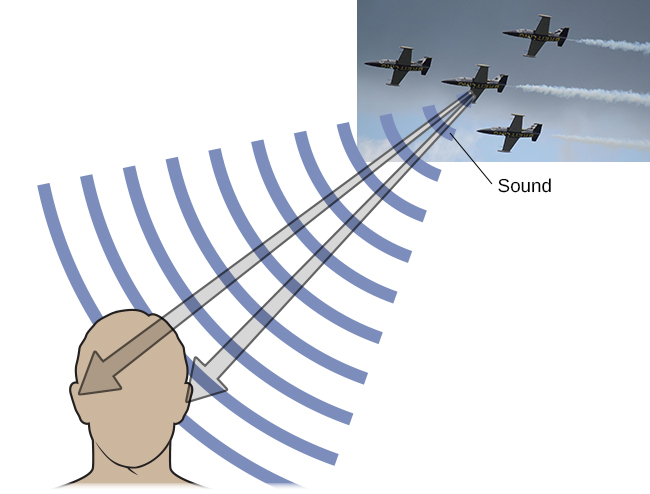

The human brain has to work hard to make sense of auditory information that arrives mixed in with many other sounds. Part of that work is determining where each sound is coming from. This task is difficult, but it is a crucial part of hearing; without it, we would likely make many mistakes about incoming auditory information. Hearing with one ear can provide excellent information about pitch, loudness, and timbre (sound identification information), but information about location is limited. Hearing with two ears improves sound localization because auditory circuits compare information from the two ears (binaural cues) to infer the location of a sound source (Figure 10.7).

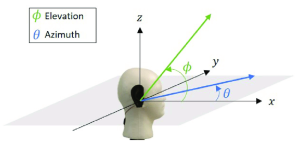

Sound location is described with a coordinate system made up of azimuth, elevation, and distance (Figure 10.8). Azimuth is the angle to the right or left of straight ahead, measured along an arc parallel to the ground. (The median plane is the vertical plane that divides your head into left and right halves; it contains the “straight ahead” direction.) Azimuth identifies whether a sound is coming from the left or the right. Elevation is the vertical counterpart of azimuth: the angle above or below the horizontal plane, which identifies whether a sound is coming from above or below. Distance is the most intuitive part of the system: how far the sound is from the listener, which also helps to pin down the source of the sound.
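As a rough illustration of the three coordinates, the sketch below converts a source position in meters into azimuth, elevation, and distance. The axis convention (x straight ahead, y to the listener's right, z up, head at the origin) and the function name `to_spherical` are assumptions made for this example, not something defined in the chapter.

```python
import math

def to_spherical(x, y, z):
    """Convert a source position to (azimuth_deg, elevation_deg, distance_m).

    Assumed convention: x = straight ahead, y = listener's right, z = up,
    with the listener's head at the origin.
    """
    distance = math.sqrt(x * x + y * y + z * z)
    if distance == 0:
        raise ValueError("source is at the center of the head")
    # Azimuth: angle left/right of straight ahead (positive = right).
    azimuth = math.degrees(math.atan2(y, x))
    # Elevation: angle above/below the horizontal plane (positive = up).
    elevation = math.degrees(math.asin(z / distance))
    return azimuth, elevation, distance

# A source 1 m ahead and 1 m to the right, at ear height.
azimuth, elevation, distance = to_spherical(1.0, 1.0, 0.0)
print(f"azimuth {azimuth:.0f} deg, elevation {elevation:.0f} deg, distance {distance:.2f} m")
```

With this convention, a source directly to the right has an azimuth of 90 degrees and a source directly overhead has an elevation of 90 degrees.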

The three coordinates work together to give us as much information as possible about where a sound is. Another central distinction in spatial hearing is between monaural (one-eared) and binaural (two-eared) hearing, which is key to determining where a sound is coming from.

Exercises

- What part of the coordinate system helps discern if a sound is to the left, right or middle?

A. Azimuth

B. Elevation

C. Horizontal

D. Distance

- What part of the coordinate system helps discern if a sound is coming from above or below?

A. Distance

B. Azimuth

C. Vertical

D. Elevation

- What part of the coordinate system deciphers how far or near a sound is?

A. Length

B. Elevation

C. Distance

D. Azimuth

Answer Key:

- A

- D

- C

The head-related transfer function (HRTF)

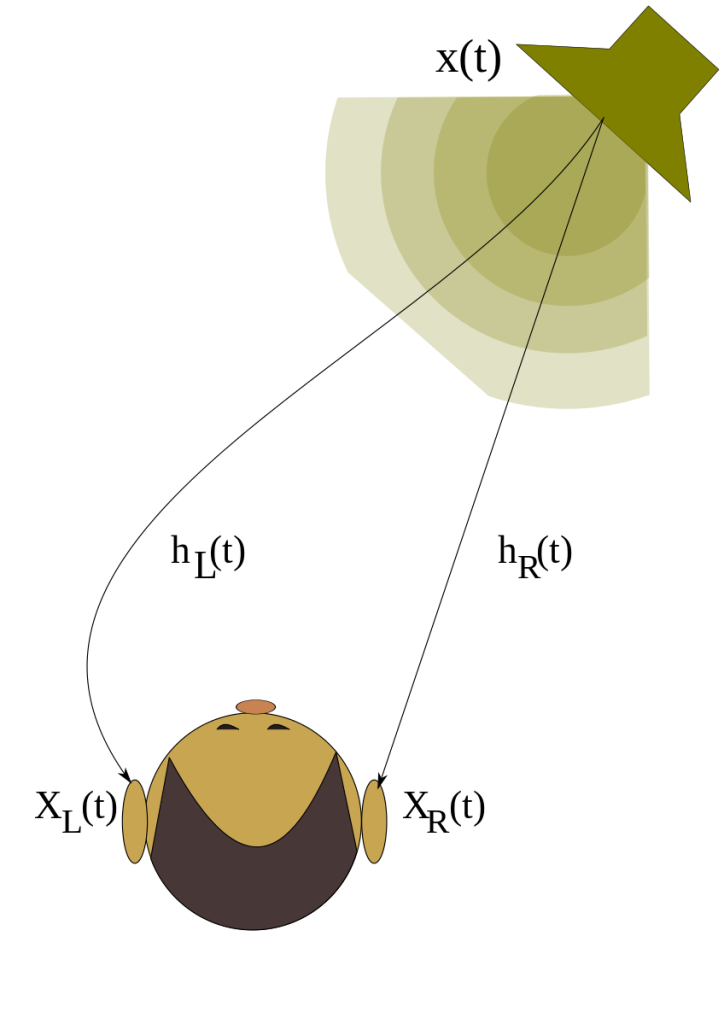

The head-related transfer function (HRTF) describes how the head and outer ear filter a sound before it reaches the eardrum, and it is the auditory system's main cue for the elevation of sound sources. In simpler terms, the HRTF helps figure out where sounds come from. This cue is monaural, meaning it is available at each ear independently (Figure 10.9). The brain also analyzes subtle differences in the arrival time and intensity of the sound between the two ears; these binaural cues mainly indicate azimuth and, combined with the HRTF, allow us to localize a sound with reasonable accuracy.

Central to the HRTF is the intricate structure of the pinnae, the visible outer parts of the ears. Every person has their own HRTF because their ears are shaped and sized differently. These anatomical differences change how strongly sounds of different pitches are attenuated at various elevations. Consequently, the HRTF provides essential cues for accurately perceiving sound elevation.

A seminal study by Hofman et al. (1998) highlighted the HRTF’s adaptability. In their experiment, the shape of the subjects’ pinnae was altered, leading to intriguing findings:

- Initially, subjects experienced difficulty determining sound elevation when presented with artificial pinnae.

- Subjects adapted over time and learned to interpret the new HRTF. This shows the brain’s remarkable ability to adjust to changes in sensory input.

- Even after acquiring a new HRTF, subjects were able to revert to their original perceptual framework once the artificial pinnae were removed, highlighting the persistence of their innate HRTF.

An essential concept in auditory perception is the “cone of confusion,” a cone-shaped surface extending outward from each ear on which every sound source location produces the same interaural time difference (ITD) and interaural level difference (ILD). Because the binaural cues are identical for all of these locations, sound localization is difficult, particularly when discerning front from back and up from down. However, the HRTF is key in resolving such confusions by giving us clues about the height of sounds. Moreover, research suggests that head movements help disambiguate sound localization cues and break down the cone of confusion (Wightman & Kistler, 1999).
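The front/back ambiguity can be sketched with a deliberately simplified model in which the ITD depends only on the sine of the azimuth. The model, the function name `simple_itd_us`, and the choice of example angles are assumptions for illustration; the 0.23 m path difference and 340 m/s speed of sound are the figures this chapter uses in its ITD arithmetic.

```python
import math

EXTRA_PATH_M = 0.23         # extra distance to the far ear (chapter's figure)
SPEED_OF_SOUND_M_S = 340.0  # speed of sound (chapter's figure)

def simple_itd_us(azimuth_deg):
    """ITD in microseconds under an assumed sine model (head shape ignored).

    Azimuth is measured from straight ahead in the horizontal plane:
    0 = front, 90 = directly right, 180 = directly behind.
    """
    max_itd_s = EXTRA_PATH_M / SPEED_OF_SOUND_M_S
    return max_itd_s * math.sin(math.radians(azimuth_deg)) * 1e6

front_right = simple_itd_us(30)   # 30 degrees right of straight ahead
back_right = simple_itd_us(150)   # mirror image behind the listener

# Both positions give the same ITD, so ITD alone cannot tell them apart.
print(f"front: {front_right:.1f} us, back: {back_right:.1f} us")
```

In this toy model, turning the head changes the two azimuths in opposite directions, so the two ITDs no longer match. That is one way to see why head movements help.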

To summarize, the HRTF, shaped by the unique features of the pinnae, is instrumental in providing elevation cues necessary for accurate sound localization. Its adaptability and role in resolving perceptual confusion emphasize its importance in understanding human auditory perception.

Binaural Cues

If a sound comes from an off-center location, it creates two types of binaural cues: interaural level differences and interaural timing differences. Interaural level difference refers to the fact that a sound coming from the right side of your body is more intense at your right ear than at your left ear, and vice versa for sounds from the left, because of the attenuation of the sound wave as it passes through your head.

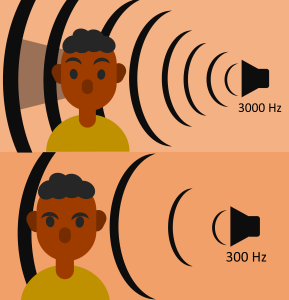

Interaural level difference

Interaural level difference (ILD) is a binaural cue for high-frequency sounds only (see Figure 10.10). High-frequency sounds have short wavelengths, so the head casts an acoustic shadow and sounds are quieter at the ear farther from the source. Below about 1000 Hz, there is essentially no ILD because the head is small compared to the wavelength of the sound, so the wave sweeps past as if the head were not there. ILDs can be as large as 20 dB for some frequencies; they depend on both frequency and the direction the sound comes from. ILDs become more useful as frequency increases, whereas ITDs stop being useful above about 1500 Hz.
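To make the wavelength argument concrete, the sketch below compares the wavelength of a few tones with the width of the head, and converts a 20 dB ILD into a sound pressure ratio. The head width of 0.18 m is an assumed typical value that does not come from this chapter, and "wavelength shorter than the head" is only a rule of thumb for when a shadow forms. The speed of sound (340 m/s) is the value used in the chapter's ITD arithmetic.

```python
SPEED_OF_SOUND_M_S = 340.0  # chapter's figure
HEAD_WIDTH_M = 0.18         # assumed typical head width, not from the chapter

def wavelength_m(frequency_hz):
    """Wavelength of a sound in air: speed divided by frequency."""
    return SPEED_OF_SOUND_M_S / frequency_hz

def pressure_ratio(ild_db):
    """Sound pressure ratio (near ear / far ear) for a level difference in dB."""
    return 10 ** (ild_db / 20)

for frequency_hz in (200, 1000, 4000, 8000):
    wl = wavelength_m(frequency_hz)
    shadow = "likely" if wl < HEAD_WIDTH_M else "unlikely"
    print(f"{frequency_hz:>5} Hz: wavelength {wl:.3f} m, head shadow {shadow}")

# A 20 dB ILD means the pressure at the near ear is 10 times larger.
print(f"20 dB corresponds to a pressure ratio of {pressure_ratio(20):.0f}")
```

At 1000 Hz the wavelength (0.34 m) is still longer than the assumed head width, which is consistent with the lack of a useful ILD at and below that frequency.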

Interaural timing difference refers to the small difference in the time at which a given sound wave arrives at each ear. Certain brain areas monitor both level and timing differences to determine where along a horizontal axis a sound originates.

Interaural Time Differences

Interaural time differences (ITD) describe the fact that a sound source on the left will generate sound that reaches the left ear slightly before it reaches the right ear (see Figure 10.11). Although sound is much slower than light, it still travels fast enough that the difference in arrival time between the two ears is only a fraction of a millisecond. The largest ITD we encounter in the real world (when sounds are directly to the left or right of us) is only a little over half a millisecond. With some practice, humans can learn to detect an ITD of between 10 and 20 μs (millionths of a second) (Klump & Eady, 1956).

For the mathematically inclined: if the path to the far ear is 23 cm (0.23 m) longer and sound travels at 340 m/s, this timing difference is at most 0.23/340 = 0.000676 seconds, which is 0.676 milliseconds or 676 microseconds. That’s tiny! The smallest difference the ears can notice is about 10 microseconds.
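The same arithmetic can be checked in a few lines of code. The second half of the sketch goes one step beyond the text: it assumes the ITD grows as the sine of the azimuth (a simplification introduced here, not taken from the chapter) and asks how far a source must move from straight ahead before the ITD reaches the 10-microsecond detection limit.

```python
import math

EXTRA_PATH_M = 0.23         # extra distance to the far ear
SPEED_OF_SOUND_M_S = 340.0  # speed of sound in air
SMALLEST_ITD_S = 10e-6      # smallest detectable ITD (10 microseconds)

# Largest ITD: the sound has to travel the whole extra path.
max_itd_s = EXTRA_PATH_M / SPEED_OF_SOUND_M_S
max_itd_us = max_itd_s * 1e6

# Assumed sine model: ITD = max_itd * sin(azimuth). Solve for the azimuth
# at which the ITD equals the smallest detectable value.
smallest_angle_deg = math.degrees(math.asin(SMALLEST_ITD_S / max_itd_s))

print(f"largest ITD: {max_itd_us:.0f} microseconds")
print(f"smallest detectable shift from straight ahead: {smallest_angle_deg:.2f} degrees")
```

Under these assumptions, a 10-microsecond limit corresponds to a shift of a bit less than one degree away from straight ahead.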

When sound waves hit human ears, action potentials are sent from each ear independently, and the time difference is determined by comparing them. These signals travel into the brain and first meet in the superior olivary complex, which is extremely sensitive to timing. Experimental psychologist and acoustical scientist Lloyd A. Jeffress proposed that the brain contains separate detectors for particular ITDs, each of which is maximally excited by its own ITD, so that the most active detector indicates the location of the sound. In 1988, the “coincidence counter circuit” predicted by Jeffress was experimentally discovered in the barn owl. However, this specific map of coincidence detectors was not found in guinea pigs, leading to the conclusion that the optimal coding strategy for time differences depends on head size as well as on the frequency of the sound. In further support of this hypothesis, it was shown that for humans, whose heads are large enough for homogeneously distributed coincidence detectors to be useful, the optimal strategy also depends on frequency. If a sound is above 400 Hz, a homogeneous distribution is optimal, but below 400 Hz, the use of distinct sub-populations is optimal (Harper & McAlpine, 2004).
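The idea behind Jeffress's proposal can be sketched as a bank of detectors, each of which applies a different internal delay to one ear's signal and responds most when the two signals line up. The code below is a toy illustration using a simple sum of products on a made-up noise signal. The function name `jeffress_best_delay`, the sample rate, and the signal are assumptions for this example, and the code is not a model of real neurons.

```python
import random

def jeffress_best_delay(left, right, max_delay):
    """Return the internal delay (in samples) of the most active detector.

    Each detector delays the left-ear signal by `delay` samples and sums the
    products with the right-ear signal. A positive result means the sound
    reached the left ear first.
    """
    n = len(left)
    best_delay, best_response = 0, float("-inf")
    for delay in range(-max_delay, max_delay + 1):
        response = 0.0
        for t in range(n):
            s = t - delay  # index into the delayed left-ear signal
            if 0 <= s < n:
                response += left[s] * right[t]
        if response > best_response:
            best_delay, best_response = delay, response
    return best_delay

SAMPLE_RATE_HZ = 100_000  # assumed: one sample every 10 microseconds
random.seed(0)
source = [random.gauss(0.0, 1.0) for _ in range(2000)]

# Source on the left: the right ear hears the same sound 30 samples later.
true_delay = 30
left_ear = source
right_ear = [0.0] * true_delay + source[:-true_delay]

best = jeffress_best_delay(left_ear, right_ear, max_delay=70)
itd_us = best / SAMPLE_RATE_HZ * 1e6
print(f"most active detector: {best} samples ({itd_us:.0f} microseconds)")
```

The detector whose internal delay exactly cancels the external delay receives perfectly aligned inputs, so its response is by far the largest. This is the sense in which each detector is tuned to one ITD.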

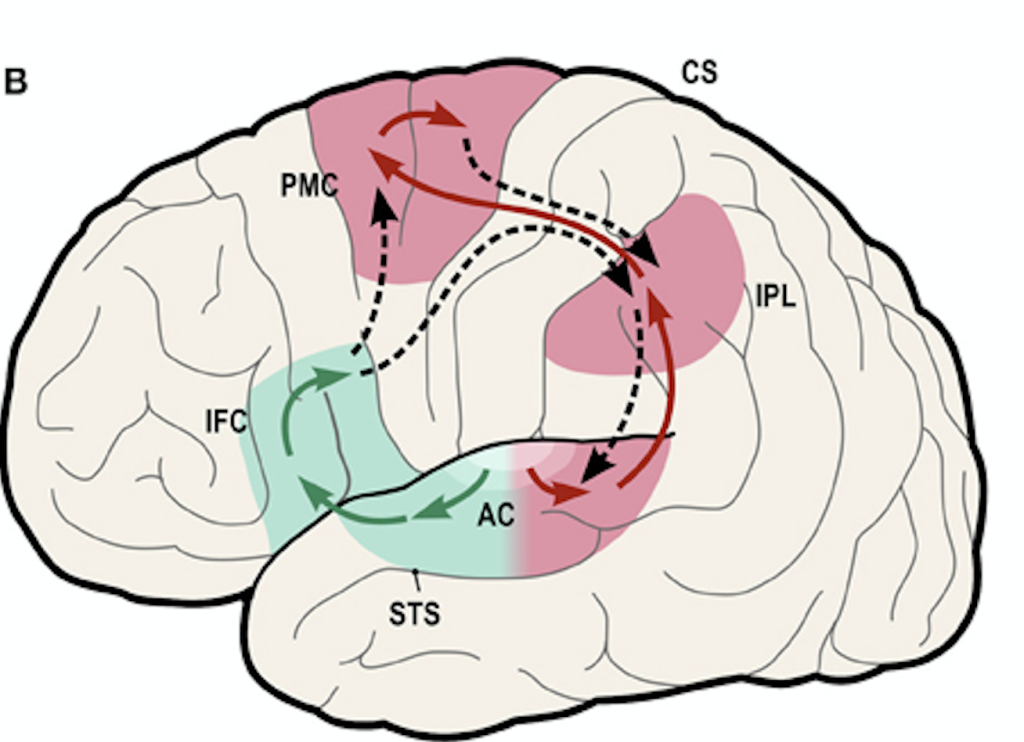

Differentiating between sound location and identity in the brain

Beyond the primary auditory cortex, A1 (labeled AC in Figure 10.12), and the belt area lie the “what” and “where” pathways of the auditory system. From modern neuroimaging studies as well as lesion studies, we know that sound identity is represented in a more ventral extended network, whereas sound location is represented in a more dorsal extended network. The following paragraph is from the OpenStax textbook on visual processing, but the general principles apply to auditory processing as well!

The ventral stream identifies visual stimuli and their significance. Because the ventral stream uses temporal lobe structures, it begins to interact with the non-sensory cortex and may be important in visual stimuli becoming part of memories. The dorsal stream locates objects in space and helps in guiding movements of the body in response to visual inputs. The dorsal stream enters the parietal lobe, where it interacts with somatosensory cortical areas that are important for our perception of the body and its movements. The dorsal stream can then influence frontal lobe activity where motor functions originate.

If you would like to learn more about the neuroimaging and lesion studies that provided evidence for the “what” and “where” pathways, the Scholarpedia article by Ungerleider and Pessoa (2008) gives an in-depth overview.

Provided by: Rice University.

Download for free at https://openstax.org/books/anatomy-and-physiology/pages/14-2-central-processing

License: CC Attribution 4.0

CC LICENSED CONTENT, SHARED PREVIOUSLY

OpenStax, Psychology Chapter 5.4 Hearing

Provided by: Rice University.

Download for free at http://cnx.org/contents/4abf04bf-93a0-45c3-9cbc-2cefd46e68cc@5.103.

License: CC-BY 4.0

Cheryl Olman PSY 3031 Detailed Outline

Provided by: University of Minnesota

Download for free at http://vision.psych.umn.edu/users/caolman/courses/PSY3031/

License of original source: CC Attribution 4.0

Adapted by: Chandni Jaspal

Adapted by: Joseph Hemenway

Adapted by: Jin Yong Lee & Rachel Lam, David Girin

Sound Segregation Authored by: Naomi Beutel ; Provided by: University of Minnesota

Adapted by: Cameron Kennedy & Kori Skrypek

Blauert, J. (1997). Spatial hearing: The psychophysics of human sound localization. MIT press. https://books.google.com/books/about/Spatial_Hearing.html?id=ApMeAQAAIAAJ

Bregman, A. S. (1990). Auditory scene analysis: The perceptual organization of sound. Cambridge, MA: MIT Press

Brungart, D. S. (2001). Informational and energetic masking effects in the perception of two simultaneous talkers. Journal of the Acoustical Society of America, 109(3), 1101-1109. https://doi.org/10.1121/1.1345696. PMID: 11303924.

Cherry, E. C. (1953). Some experiments on the recognition of speech with one and with two ears. Journal of the Acoustical Society of America, 25, 975-979.

Hofman, P., Van Riswick, J., & Van Opstal, A. (1998). Relearning sound localization with new ears. Nature Neuroscience, 1, 417–421. https://doi.org/10.1038/1633

Ungerleider, L. G., & Pessoa, L. (2008). What and where pathways. Scholarpedia, 3(11). https://doi.org/10.4249/scholarpedia.5342.

Wightman, F. L., & Kistler, D. J. (1999). Resolution of front-back ambiguity in spatial hearing by listener and source movement. Journal of the Acoustical Society of America, 105(5), 2841-2853. https://doi.org/10.1121/1.426899. PMID: 10335634.