Chapter 3: Visual Pathways

3.3. V1 receptive fields

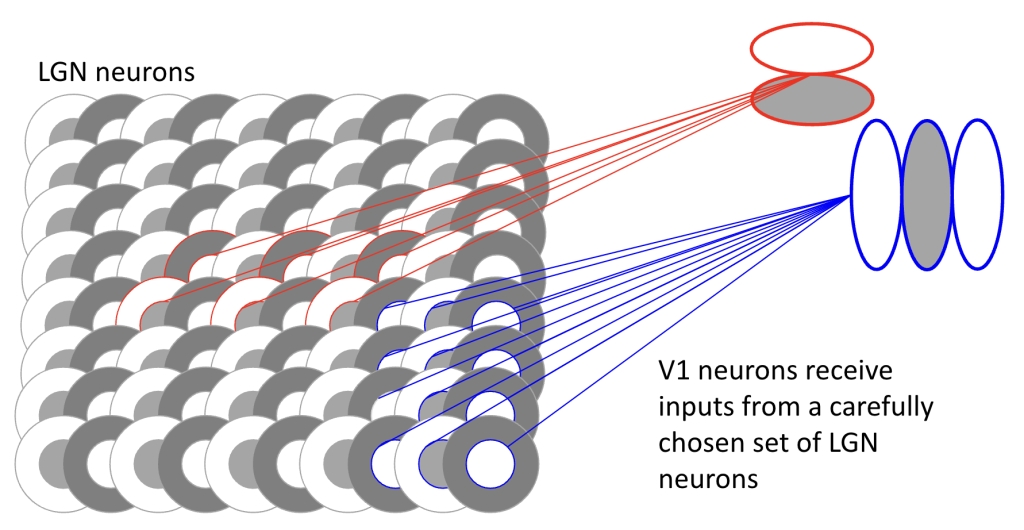

Hubel and Wiesel, studying V1 in cats in the 1960s, discovered that the receptive fields of simple cells in V1 show orientation selectivity. In other words, some V1 neurons respond preferentially to vertical lines, while others respond more strongly to horizontal lines. For any orientation of a line, there is a group of V1 neurons that responds most strongly to that orientation. Figure 3.4 below shows a simplified wiring diagram of how convergence from LGN neurons onto V1 neurons can create basic orientation-selective units. Near the fovea, the region of visual space over which V1 neurons pool their inputs is very small; V1 neurons with receptive fields at peripheral locations sample from a larger pool of LGN inputs.
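The convergence idea can be sketched numerically. The Python below is a minimal toy model, not a fit to real data. It assumes that each LGN input is an ON-center difference-of-Gaussians receptive field (`lgn_on_center`), that five such inputs with centers stacked along a vertical line feed one V1 simple cell (`simple_cell_rf`), and that the cell responds linearly to a bright bar (`bar_response`). All names, sizes, and parameter values are hypothetical.

```python
import numpy as np

# Sampling grid for visual space (arbitrary units, e.g. degrees of visual angle)
coords = np.linspace(-8.0, 8.0, 321)
X, Y = np.meshgrid(coords, coords)
dA = (coords[1] - coords[0]) ** 2  # area of one grid cell


def lgn_on_center(cx, cy, sigma_c=0.5, sigma_s=1.5):
    """Hypothetical ON-center LGN receptive field: a balanced difference of Gaussians."""
    r2 = (X - cx) ** 2 + (Y - cy) ** 2
    center = np.exp(-r2 / (2 * sigma_c**2)) / (2 * np.pi * sigma_c**2)
    surround = np.exp(-r2 / (2 * sigma_s**2)) / (2 * np.pi * sigma_s**2)
    return center - surround


def simple_cell_rf(n_lgn=5, spacing=1.0):
    """Sum LGN fields whose centers are stacked along a vertical line through the origin."""
    offsets = (np.arange(n_lgn) - (n_lgn - 1) / 2) * spacing
    return sum(lgn_on_center(0.0, cy) for cy in offsets)


def bar_response(rf, theta_deg, width=1.0):
    """Linear response to a bright bar through the origin (0 deg = vertical, 90 deg = horizontal)."""
    theta = np.deg2rad(theta_deg)
    dist = np.abs(X * np.cos(theta) - Y * np.sin(theta))  # distance from the bar's midline
    bar = (dist < width / 2).astype(float)
    return float(np.sum(rf * bar) * dA)


rf = simple_cell_rf()
angles = list(range(0, 180, 15))
responses = [bar_response(rf, a) for a in angles]
for a, r in zip(angles, responses):
    print(f"{a:3d} deg: {r:6.3f}")
```

Under these assumptions the summed receptive field is elongated vertically, so a vertical bar (0°) drives the cell most strongly. A horizontal bar (90°) drives it only weakly, because the bar then falls mostly on the inhibitory surrounds of the LGN inputs above and below the center.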

In addition to orientation selectivity, V1 neurons are also selective for spatial frequency: how fine or coarse a visual pattern is. Just as some V1 neurons respond best to vertical lines and others to horizontal lines, different V1 neurons are tuned to patterns of particular spatial scales. Some respond maximally to coarse patterns (low spatial frequencies), while others prefer medium or fine detail (medium and high spatial frequencies).

This selectivity can be understood through a convergence model similar to the one shown for orientation selectivity in Figure 3.4. Rather than LGN inputs being arranged to detect edges of particular orientations, the spatial arrangement and pooling of LGN inputs determine the scale of pattern to which the V1 neuron responds best. V1 neurons near the fovea that pool from a small region of visual space tend to be selective for higher spatial frequencies (finer detail), while those sampling from larger peripheral regions often prefer lower spatial frequencies (coarser patterns). This multi-scale processing helps explain why we can perceive both fine details and overall patterns in visual scenes: different populations of V1 neurons simultaneously encode information at different spatial scales. In general, our contrast sensitivity varies with spatial frequency (see Figure 3.5). We need a lot of contrast to see small details (high spatial frequencies) and less contrast to see coarser patterns.
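A one-dimensional sketch shows how the size of the pooled region could set a neuron's preferred spatial frequency. It assumes, hypothetically, that a V1 cell's cross-section is an ON subregion flanked by two OFF subregions, each a Gaussian standing in for a group of LGN inputs, with a `spacing` parameter that sets the size of the pooled region. It then measures the response amplitude to sinusoidal gratings at the best phase. The function names and parameter values are illustrative only.

```python
import numpy as np

x = np.linspace(-20.0, 20.0, 4001)  # visual space in degrees (hypothetical units)
dx = x[1] - x[0]


def gaussian(x, mu, sigma):
    return np.exp(-((x - mu) ** 2) / (2 * sigma**2))


def simple_cell_profile(spacing):
    """Hypothetical 1D cross-section: an ON subregion flanked by two OFF subregions.

    Each subregion stands in for a group of pooled LGN inputs; `spacing` is the
    distance between subregions, i.e. the size of the pooled region.
    """
    sigma = spacing / 2
    on = gaussian(x, 0.0, sigma)
    off = 0.5 * (gaussian(x, -spacing, sigma) + gaussian(x, spacing, sigma))
    return on - off


def grating_amplitude(profile, freq):
    """Response amplitude to a sinusoidal grating of `freq` cycles/deg at its best phase."""
    return float(np.abs(np.sum(profile * np.exp(2j * np.pi * freq * x)) * dx))


freqs = np.linspace(0.05, 4.0, 400)
preferred = {}
for spacing in (0.25, 1.0):  # e.g. a small foveal pool vs. a larger peripheral pool
    profile = simple_cell_profile(spacing)
    amps = [grating_amplitude(profile, f) for f in freqs]
    preferred[spacing] = float(freqs[int(np.argmax(amps))])
    print(f"spacing {spacing} deg -> preferred SF ~ {preferred[spacing]:.2f} cycles/deg")
```

Because every part of the profile scales with `spacing`, shrinking the pooled region by a factor of four should raise the preferred spatial frequency by roughly the same factor. This matches the idea that foveal neurons with small pools favor finer detail.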

V1 neurons also do more than signal the orientation and spatial frequency of local features. When pooling LGN inputs, a V1 neuron may combine signals that carry different color contrasts, so some V1 neurons are selective for color, while others are selective only for luminance (brightness).
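Here is a toy illustration of that point. It assumes, for illustration only, that a unit's inputs can be summarized as L-cone and M-cone contrast signals. A unit that adds them (L + M) behaves like a luminance detector, while a unit that subtracts them (L − M) behaves like a red-green color-opponent detector. The weights and stimulus values are hypothetical.

```python
import numpy as np

# Hypothetical cone-contrast stimuli: (L-cone contrast, M-cone contrast)
stimuli = {
    "luminance increment": np.array([0.2, 0.2]),       # L and M both increase
    "red-green (isoluminant)": np.array([0.2, -0.2]),  # L up, M down
}

# Hypothetical V1 units pooling the same cone signals with different weights
units = {
    "luminance unit (L + M)": np.array([1.0, 1.0]),
    "color-opponent unit (L - M)": np.array([1.0, -1.0]),
}


def response(weights, stimulus):
    """Rectified linear response: weighted sum of cone contrasts, negative values set to 0."""
    return max(0.0, float(weights @ stimulus))


for uname, w in units.items():
    for sname, s in stimuli.items():
        print(f"{uname:30s} {sname:25s} {response(w, s):.2f}")
```

Each unit responds to one kind of stimulus and ignores the other, even though both receive the same two input signals. Only the sign of the pooling weights differs.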

Like the retina, V1 neural networks are built in layers, with different layers having different computational roles. The cortex is between 2.5 mm and 3 mm thick and contains somewhere between 10,000 and 40,000 neurons per cubic millimeter. So in a ~1 × 1 × 3 mm block of cortex that represents a single location in the visual field (each hemisphere of human V1 typically has a surface area of 12–15 cm² to represent the entire visual field [Benson et al., 2022]), there are roughly 30,000 to 120,000 neurons encoding many different aspects of what is going on at that one location.
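The neuron count for such a block follows from multiplying its volume by the quoted density range:

$$
N \approx (1\,\text{mm} \times 1\,\text{mm} \times 3\,\text{mm}) \times (10{,}000 \text{ to } 40{,}000)\,\tfrac{\text{neurons}}{\text{mm}^3} = 3\,\text{mm}^3 \times (10{,}000 \text{ to } 40{,}000)\,\tfrac{\text{neurons}}{\text{mm}^3} \approx 30{,}000 \text{ to } 120{,}000 \text{ neurons}
$$

Using the thinner 2.5 mm figure instead gives about 25,000 to 100,000 neurons.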

The simplified wiring diagram in Figure 3.4 shows what might be happening in the input layers, which lie in the middle of the cortical thickness. Outputs from V1 leave from the superficial layers (away from the white matter, toward the surface of the brain). Neurons in these output layers have another opportunity to mix and match information from the middle layers to create new information. For example, most neurons in the middle layers receive input from only one eye or the other. Neurons in superficial layers can combine information from the two eyes to compute binocular disparity, the small difference between the positions of an object's images in the two eyes, which is an important cue to how far away the object is from the observer. Disparity as a depth cue will be covered in later sections of this textbook.
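Combining the two eyes' inputs to recover disparity can be sketched as a comparison across a range of offsets. The Python below is a simplified cross-correlation toy, loosely in the spirit of binocular models rather than an implementation of any specific one. It assumes a 1D random-texture scene, a right-eye image shifted by a hypothetical `true_disparity` of 3 samples, and a bank of binocular units (`binocular_response`, a hypothetical name) whose right-eye input is offset by different amounts. The unit whose offset matches the shift should respond most.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200
scene = rng.standard_normal(n + 20)  # hypothetical 1D random-texture scene

true_disparity = 3  # hypothetical shift (in samples) between the two eyes' images
left_image = scene[10:10 + n]
right_image = scene[10 - true_disparity:10 - true_disparity + n]  # right[i + 3] == left[i]


def binocular_response(left, right, offset, center=100, halfwidth=30):
    """Toy binocular unit: sums products of left-eye inputs and right-eye inputs
    taken `offset` samples away, over a receptive field around `center`."""
    idx = np.arange(center - halfwidth, center + halfwidth)
    return float(np.sum(left[idx] * right[idx + offset]))


offsets = range(-6, 7)
responses = {d: binocular_response(left_image, right_image, d) for d in offsets}
best = max(responses, key=responses.get)
print("offset of the most active unit:", best)
```

Only the matching offset lines up identical texture in the two eyes. All other offsets multiply unrelated values, which largely cancel, so the most active unit signals the disparity.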

One more computation known to take place in V1 is direction selectivity. A vertical edge formed by the mast of a sailboat, for example, might be moving to the left or to the right, and many V1 neurons tuned to vertical orientations respond more strongly to one of these directions than to the other. Some respond equally well to both; those neurons have a low direction selectivity index, even though they may have a high orientation selectivity index. Direction selectivity could arise from neurons that sample selectively from LGN neurons with different response speeds at different locations (Chariker et al., 2022), from differences in axon length that delay some inputs to the superficial layers (Conway et al., 2005), or from differences between excitatory and inhibitory synaptic inputs in V1 (Freeman, 2021). This is an active area of research!
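The delay idea can be illustrated with a two-input toy unit. This Python sketch follows the general logic of delay-based models but is not the specific mechanism of any study cited above. It assumes two input sites two positions apart, a two-step delay on the left-hand input (standing in for, say, a longer axon), and a threshold that requires both inputs to arrive together. It also computes one common form of the direction selectivity index, DSI = (R_pref − R_null) / (R_pref + R_null); other normalizations are also used. All names and values are hypothetical.

```python
import numpy as np

T = 40
t = np.arange(T)
X1, X2 = 0, 2  # positions of the two hypothetical input sites (X2 is to the right)


def inputs(direction):
    """Signals at the two sites for a thin bar moving one position per time step."""
    pos = t - 10 if direction == "right" else 12 - t
    return (pos == X1).astype(float), (pos == X2).astype(float)


def delay(signal, steps):
    """Shift a signal later in time by `steps` (zero-padded), e.g. a slower axonal path."""
    return np.concatenate([np.zeros(steps), signal[:len(signal) - steps]])


def unit_response(direction, tau=2, threshold=1.5):
    """Delayed left input plus undelayed right input, rectified above a threshold."""
    s1, s2 = inputs(direction)
    drive = delay(s1, tau) + s2
    return float(np.sum(np.maximum(0.0, drive - threshold)))


def dsi(r_pref, r_null):
    """One common direction selectivity index: (pref - null) / (pref + null)."""
    return (r_pref - r_null) / (r_pref + r_null)


for label, thr in [("coincidence (thresholded)", 1.5), ("linear sum", 0.0)]:
    r_right = unit_response("right", threshold=thr)
    r_left = unit_response("left", threshold=thr)
    print(f"{label}: right={r_right}, left={r_left}, DSI={dsi(r_right, r_left):.2f}")
```

With the threshold, only rightward motion brings the delayed and undelayed signals into coincidence, giving a DSI of 1. Summing the same inputs linearly (threshold 0) produces equal responses to both directions and a DSI of 0, like the non-direction-selective neurons described above.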

References

Benson, N. C., Yoon, J. M., Forenzo, D., Engel, S. A., Kay, K. N., & Winawer, J. (2022). Variability of the surface area of the V1, V2, and V3 maps in a large sample of human observers. Journal of Neuroscience, 42(46), 8629-8646.

Chariker, L., Shapley, R., Hawken, M., & Young, L. S. (2022). A computational model of direction selectivity in Macaque V1 cortex based on dynamic differences between ON and OFF pathways. Journal of Neuroscience, 42(16), 3365-3380.

Conway, B. R., Kitaoka, A., Yazdanbakhsh, A., Pack, C. C., & Livingstone, M. S. (2005). Neural basis for a powerful static motion illusion. Journal of Neuroscience, 25(23), 5651-5656.

Freeman, A. W. (2021). A model for the origin of motion direction selectivity in visual cortex. Journal of Neuroscience, 41(1), 89-102.