5.4. Hearing

Our auditory system converts sound waves, which are changes in air pressure, into meaningful sounds. This translates into our ability to hear the sounds of nature, to appreciate the beauty of music, and to communicate with one another through spoken language. This section will provide an overview of the basic anatomy and function of the auditory system. It will include a discussion of how sensory stimuli are translated into neural impulses, the parts of the brain that process that information, how we perceive pitch, and how we know where sound is coming from.

Anatomy of the Auditory System

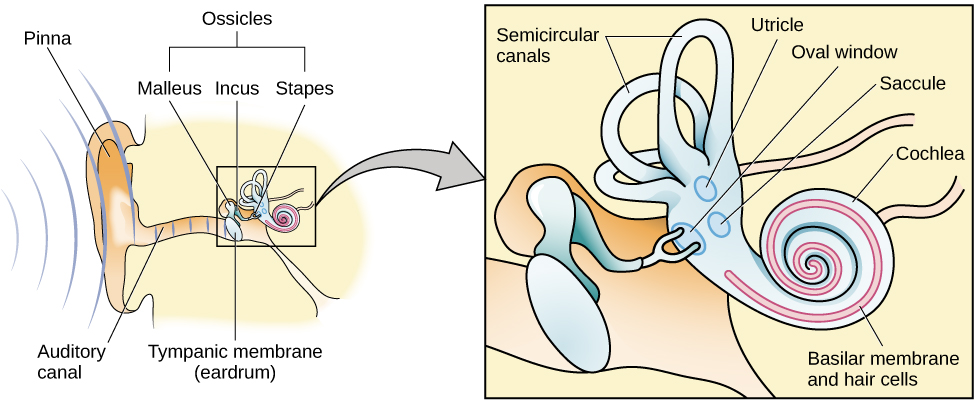

The ear is designed to receive and transmit sound to the receptors deep inside it. It can be separated into three main sections. The outer ear structures include the pinna, which is the visible part of the ear, the auditory canal, and the tympanic membrane or eardrum. The middle ear structures consist of three tiny bones known as the ossicles. The inner ear contains the cochlea, a fluid-filled, snail-shaped structure that contains the sensory receptor cells (hair cells) of the auditory system (Figure 5.20). The vestibular system is also located in the inner ear; it is involved in balance and movement (the vestibular sense) but not hearing.

Sound waves cause the air in the auditory canal to vibrate. When these vibrations reach the tympanic membrane, it too begins to move. It is more difficult for sound to travel through the fluid-filled inner ear than through air. However, the movement of the ossicles in the middle ear amplifies the vibration, which is then passed on to the inner ear through the flexing of the oval window. This then causes the fluid inside the cochlea to move, which in turn moves the hair cells.

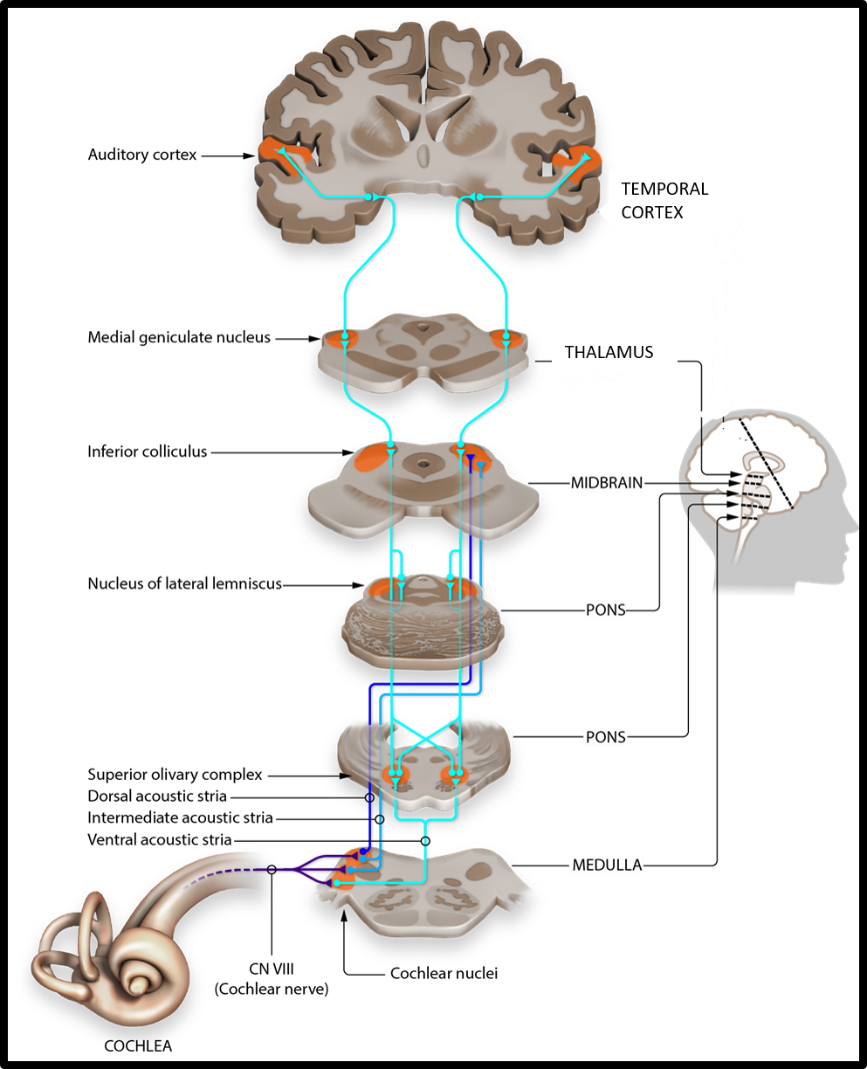

The activation of hair cells is a mechanical process: the physical movement of the hairs on the cell leads to the generation of action potentials (neural impulses) that travel along the auditory nerve to the brain. Auditory information is shuttled to the pons, then to the midbrain (inferior colliculus), then to the medial geniculate nucleus of the thalamus, and finally to the primary auditory cortex in the temporal lobe of the brain for processing. This information is then passed to other association areas of the brain (Figure 5.21).

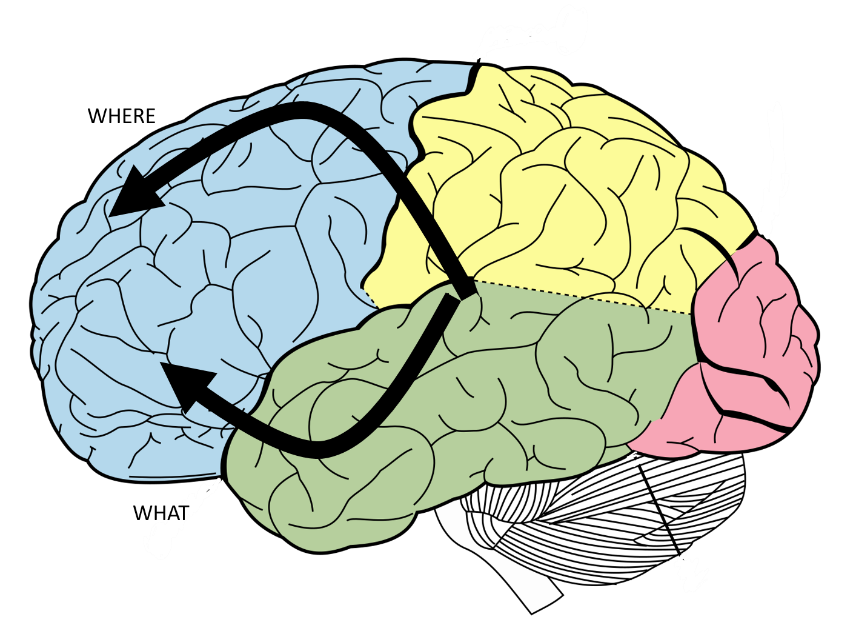

As in the visual system, information about what a sound is and where it is coming from is processed simultaneously in two parallel neural pathways (Rauschecker & Tian, 2000; Renier et al., 2009). Information that helps us to recognize the sound is processed in the “what” pathway, which extends through other parts of the temporal lobe to the inferior frontal lobe. Information that helps us to understand where the sound is coming from is processed in the “where” pathway, which extends via the parietal lobe to the superior frontal lobe (see Figure 5.22).

Pitch Perception

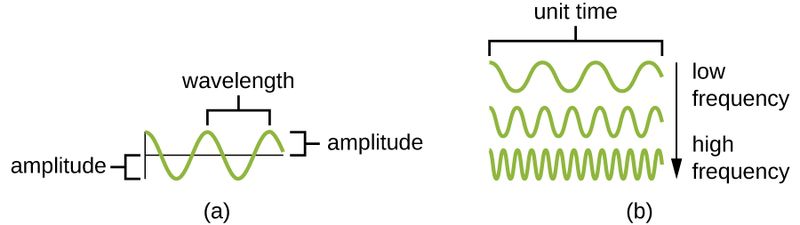

We perceive sound waves of different frequencies as tones of different pitches. Low-frequency sounds are lower pitched, and high-frequency sounds are higher pitched (see Figure 5.23). In other words, if a person has a deep voice, it is because they are producing a lot of low-frequency sound waves, whereas high-pitched voices contain sound waves of higher frequency. How does the auditory system differentiate among various pitches?

Several theories have been proposed to account for our ability to translate frequency into pitch. We’ll discuss two of them here: temporal theory and place theory. The temporal theory of pitch perception suggests that a given hair cell produces action potentials at the same frequency as the sound wave. So, a 200 Hz sound will produce 200 action potentials per second. This seems to work for lower frequencies (up to 4,000 vibrations per second). However, we know that neurons need a short rest between action potentials, so this theory cannot explain how we can detect frequencies of up to 20,000 vibrations per second (Shamma, 2001).
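The arithmetic behind this limit can be sketched in a few lines of Python. This is only an illustration, not a model of real neurons: the function names are hypothetical, and the minimum rest interval of 0.25 ms is an assumed value chosen so that the ceiling matches the 4,000 vibrations per second mentioned above.

```python
def spike_interval_ms(frequency_hz: float) -> float:
    """Time between action potentials if a cell fires once per sound-wave cycle."""
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")
    return 1000.0 / frequency_hz


def can_fire_once_per_cycle(frequency_hz: float, min_interval_ms: float) -> bool:
    """True if the cell has enough time to rest between cycles of the sound wave."""
    return spike_interval_ms(frequency_hz) >= min_interval_ms


# ASSUMPTION: illustrative rest interval, picked to match the 4,000 Hz ceiling
# in the text. It is not a measured physiological value.
ASSUMED_MIN_INTERVAL_MS = 0.25

for frequency in (200, 4_000, 20_000):
    interval = spike_interval_ms(frequency)
    ok = can_fire_once_per_cycle(frequency, ASSUMED_MIN_INTERVAL_MS)
    print(f"{frequency} Hz needs one spike every {interval} ms; feasible: {ok}")
```

Under this assumption, a 200 Hz tone leaves 5 ms between action potentials, which is plenty of time, while a 20,000 Hz tone would leave only 0.05 ms, far less than the assumed rest interval.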

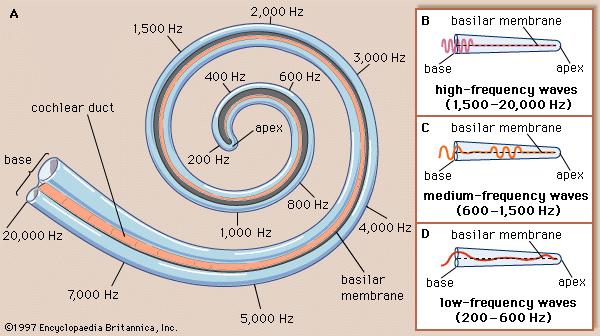

The place theory of pitch perception complements the temporal theory and suggests that auditory receptors in different portions of the cochlea are sensitive to sounds of different frequencies (Figure 5.24). More specifically, hair cells in the base of the cochlea respond best to high frequencies and hair cells in the tip of the cochlea respond best to low frequencies (Shamma, 2001). We can say that the cochlea is arranged tonotopically (according to tone). The primary auditory cortex is also arranged tonotopically.
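To make the idea of a tonotopic map concrete, the sketch below uses a frequency-position formula that is often attributed to Greenwood, with constants commonly quoted for the human cochlea. The formula and its constants do not come from this chapter and should be treated as an assumption; the function name is hypothetical. Position 0 stands for the tip of the cochlea and position 1 for the base.

```python
def best_frequency_hz(position_from_tip: float) -> float:
    """Approximate best frequency at a relative position along the cochlea.

    position_from_tip: 0.0 at the tip of the cochlea, 1.0 at the base.
    ASSUMPTION: Greenwood-style formula with commonly quoted human constants.
    """
    if not 0.0 <= position_from_tip <= 1.0:
        raise ValueError("position_from_tip must be between 0 and 1")
    scale, slope, offset = 165.4, 2.1, 0.88  # assumed constants, not from the text
    return scale * (10 ** (slope * position_from_tip) - offset)


# Walk from the tip (low frequencies) to the base (high frequencies).
for position in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"position {position:.2f}: about {best_frequency_hz(position):.0f} Hz")
```

With these assumed constants, the map runs from roughly 20 Hz at the tip to roughly 20,000 Hz at the base, which is consistent with the place theory's claim that the base responds best to high frequencies and the tip to low frequencies.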

Sound Localization

The ability to locate sound in our environment is an important part of hearing. Just as the visual system uses monocular and binocular cues to provide information about depth, the auditory system uses both monaural (one-eared) and binaural (two-eared) cues to help us know where sound is coming from.

Sound coming into the ear hits the nooks and crannies in the pinna at different angles depending on its location (above, below, in front of, or behind us). Thus, each sound has a slightly different mixture of frequencies and amplitudes depending on its location, which provides a monaural cue (Grothe et al., 2010).

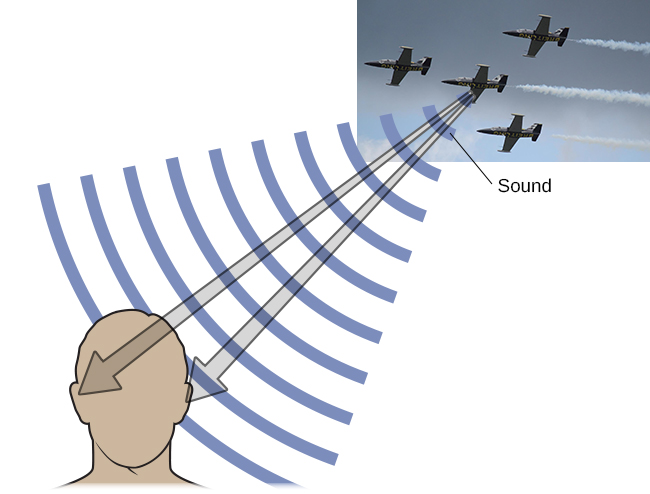

If we have good hearing in both ears, we can also use binaural cues to help localize a sound. Binaural cues are particularly helpful for letting us know whether something is on our right, left, straight ahead, or directly behind us. When sound comes from the side, it reaches the nearer ear first; thus, we have an interaural timing difference. It is also louder in the nearer ear, which we refer to as an interaural level difference (Figure 5.25). Structures in the brainstem and midbrain (Figure 5.21) are important for determining where a sound originates (Grothe et al., 2010).
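A rough sense of how small the interaural timing difference is can be obtained from a simple path-length calculation. The sketch below is a simplification under stated assumptions: it treats the ears as two points about 0.18 m apart (an assumed value), uses a speed of sound of about 343 m/s in air, and ignores the way sound bends around the head. The function name is hypothetical.

```python
import math

SPEED_OF_SOUND_M_PER_S = 343.0  # approximate speed of sound in air at room temperature


def interaural_time_difference_ms(azimuth_deg: float,
                                  ear_separation_m: float = 0.18) -> float:
    """Estimate how much later a sound reaches the far ear, in milliseconds.

    azimuth_deg: 0 is straight ahead, 90 is directly to the right,
                 -90 is directly to the left.
    ear_separation_m: ASSUMED distance between the ears (not from the text).
    """
    extra_path_m = ear_separation_m * math.sin(math.radians(azimuth_deg))
    return 1000.0 * extra_path_m / SPEED_OF_SOUND_M_PER_S


for angle in (0, 30, 60, 90):
    itd = interaural_time_difference_ms(angle)
    print(f"sound at {angle} degrees: timing difference of about {itd:.2f} ms")
```

Under these assumptions, a sound from straight ahead produces no timing difference, and a sound directly to one side produces a difference of roughly half a millisecond.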

Hearing Loss

Deafness is the partial or complete inability to hear. There are two main types of hearing loss. Conductive hearing loss occurs when sound fails to reach the cochlea. Causes of conductive hearing loss include blockage of the ear canal, a hole in the tympanic membrane, or problems with the ossicles. Sensorineural hearing loss, however, is the most common form of hearing loss. It occurs when neural signals from the cochlea fail to be transmitted to the brain, and it can be caused by many factors, such as aging, head or acoustic trauma, infections and diseases (such as measles or mumps), medications, environmental effects such as noise exposure (Figure 5.26), and toxins (such as those found in certain solvents and metals).

Some people are born without hearing, which is known as congenital deafness. This could be inherited or due to birth complications, prematurity, or infections during pregnancy. Most states mandate that babies have their hearing tested shortly after birth (Penn State Health, 2023). This increases the likelihood that problems can be corrected within the critical period for the development of hearing, which is important for language development.

Conductive hearing loss and sensorineural hearing loss are often helped with hearing aids that amplify the vibrations of incoming sound waves. Some individuals might also be candidates for a cochlear implant as a treatment option. Cochlear implants are electronic devices that consist of a microphone, a speech processor, and an electrode array. The device receives sound waves and directly stimulates the auditory nerve to transmit information to the brain. However, because hearing and language develop relatively early in life, cochlear implants work best for very young children or for people who had normal hearing and language before becoming deaf.

Link to Learning

Watch this video about cochlear implant surgeries to learn more.

Deaf Culture

In the United States and other places around the world, deaf people have their own culture, defined by specific language(s), schools, and customs. In the United States, deaf and hard of hearing individuals often communicate using American Sign Language (ASL); ASL has no verbal component and is based entirely on visual signs, facial expressions, and gestures. Deaf culture particularly values using sign language and creating community among the deaf and hard of hearing. Deaf culture highlights that children who are hard of hearing or deaf often feel isolated, stigmatized, and lonely in mainstream education. In contrast, being educated within a school for the deaf fosters a greater sense of belonging (Cripps, 2023). Some members of the deaf community strongly object to the use of cochlear implants and to learning how to lip-read, arguing that this approach treats deafness as a medical problem that needs to be cured rather than a treasured cultural identity (Cooper, 2019). When a child is diagnosed as deaf or hard of hearing, parents have difficult decisions to make. They have to decide whether to enroll their child in mainstream schools and teach them to verbalize and read lips, or send them to a school for deaf children to learn ASL and have significant exposure to deaf culture. How would you feel if you were to face this situation?