Chapter 6. Learning

6.3. Operant Conditioning

The previous section of this chapter focused on the type of associative learning known as classical conditioning. Remember that in classical conditioning, an organism learns to associate new stimuli with other stimuli that produce natural, biological responses such as salivation, fear, or vomiting. The organism does not learn a new behavior; instead, a new signal produces the behavior. Now we turn to a second type of associative learning, operant conditioning. In operant conditioning, organisms learn to associate a behavior with its consequence (Table 6.1). A pleasant consequence makes that behavior more likely to be repeated in the future. For example, if Spirit, a dolphin at the aquarium, does a flip in the air when her trainer blows a whistle, she gets a fish. This makes her eager to do it again. The fish reinforces the behavior.

Behaviorist psychologists in the United States, such as Edward Thorndike and B. F. Skinner, realized that classical conditioning cannot account for new behaviors, such as riding a bike. So, they went to work on studies centered on operant conditioning. In some of Thorndike’s studies (1911), he would put a cat inside a “puzzle box” to see if it could figure out how to escape. Through trial and error, the cat eventually pressed the lever that opened the door, allowing it to leave the box to eat a scrap of fish placed just outside. The cat got quicker at opening the door each time it was put in the box. Thorndike took this as an indication of learning. Observing these changes in the cats’ behavior led Thorndike to develop his Law of Effect: the principle that behaviors that lead to a pleasant outcome in a particular situation are more likely to be repeated in a similar situation, whereas behaviors that produce unpleasant outcomes are less likely to occur again. The Law of Effect works for human behavior too: if you pay someone to work for you, they are more likely to want to continue working for you.

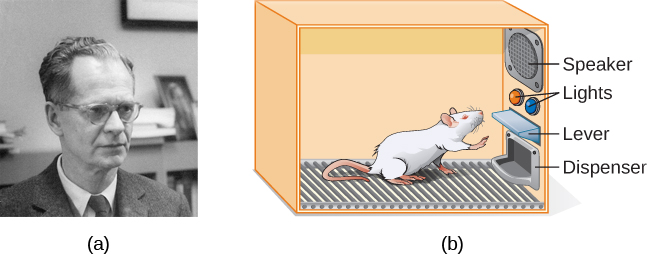

Working with Thorndike’s Law of Effect as his foundation, Skinner also began conducting scientific experiments on animals (mainly rats and pigeons) to determine how they learned through operant conditioning (Skinner, 1938). He placed an animal inside an operant conditioning chamber, which has come to be known as a “Skinner box” (Figure 6.6). A Skinner box contains a lever (for rats) or disk (for pigeons) that the animal can press or peck for a food reward via the dispenser. A person counts the number of responses made by the animal. For example, a rat can be trained to press a bar in response to a flashing light, by rewarding the rat with food.

Link to Learning

Watch this brief video to see Skinner’s interview and a demonstration of operant conditioning of pigeons to learn more.

Psychologists use several everyday words—positive, negative, reinforcement, and punishment—in a specialized manner when describing operant conditioning. This can be confusing at first. In operant conditioning, positive and negative do not mean good and bad. Instead, positive (+) means you are adding something, and negative (-) means you are taking something away. Reinforcement means you are increasing a behavior, and punishment means you are decreasing a behavior. Reinforcement can be positive or negative, and punishment can also be positive or negative. All reinforcers increase the likelihood of a behavioral response. All punishments decrease the likelihood of a behavioral response. Now let’s combine these four terms: positive reinforcement, negative reinforcement, positive punishment, and negative punishment (Table 6.1).

Table 6.1. Positive and Negative Reinforcement and Punishment

| | REINFORCEMENT | PUNISHMENT |
|---|---|---|
| POSITIVE | Something is added to increase the likelihood of a behavior. | Something is added to decrease the likelihood of a behavior. |
| NEGATIVE | Something is removed to increase the likelihood of a behavior. | Something is removed to decrease the likelihood of a behavior. |
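The two-by-two logic of Table 6.1 can be sketched in a few lines of Python. This is an illustration only: the function name `classify_consequence` and the string labels `"added"`, `"removed"`, `"increase"`, and `"decrease"` are assumptions introduced here, not terms from the research literature.

```python
def classify_consequence(stimulus: str, effect: str) -> str:
    """Name the operant conditioning term for a consequence.

    stimulus: "added" or "removed" (was something given or taken away?)
    effect:   "increase" or "decrease" (what happens to the behavior?)
    Both label sets are hypothetical conventions for this sketch.
    """
    if stimulus not in ("added", "removed"):
        raise ValueError("stimulus must be 'added' or 'removed'")
    if effect not in ("increase", "decrease"):
        raise ValueError("effect must be 'increase' or 'decrease'")

    # Positive/negative describes the stimulus, not whether it is good or bad.
    sign = "positive" if stimulus == "added" else "negative"
    # Reinforcement/punishment describes the effect on the behavior.
    kind = "reinforcement" if effect == "increase" else "punishment"
    return f"{sign} {kind}"


# A treat is given and the behavior becomes more frequent.
print(classify_consequence("added", "increase"))    # positive reinforcement
# A privilege is taken away and the behavior becomes less frequent.
print(classify_consequence("removed", "decrease"))  # negative punishment
```

Notice that the function never asks whether the stimulus is pleasant or unpleasant. The two questions in Table 6.1 (was something added or removed, and did the behavior increase or decrease) are enough to pick the term.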

Reinforcement

Thorndike and Skinner both found that the most effective way to teach a person or animal a new behavior is with positive reinforcement. In positive reinforcement, a desirable stimulus is given to increase a behavior. For example, you might tell your five-year-old son, Jerome, that if he cleans up his room, he will get some money. Jerome quickly cleans up his room because he wants the $2 you promised him. The money is a positive reinforcer, something that was given to increase the likelihood of a desired behavior (cleaning up). Rewards for a child might include being read a story or receiving money, toys, a favorite food, or a sticker. You can probably think of many more incentives. Fryer (2010) found that paying children in school districts with below-average reading scores to read books increased their reading comprehension. Specifically, second-grade students in Dallas were paid $2 each time they passed a short quiz about a book they had just read (Fryer, 2010). Fryer found that incentives benefited boys (especially Latinx boys) more than girls. However, Fryer also found no increase in reading and math test scores among fourth graders in New York when they were paid $5 for completing the test and $25 for a perfect score. What do you think about paying children to do schoolwork? Why do you think the incentives worked for second graders but not fourth graders?

Early psychologists, such as Pressey (in the 1920s) and Skinner (in the 1950s), created teaching machines that used operant conditioning principles to improve school performance (Ludy, 1988; Skinner, 1961). These machines were early forerunners of computer-assisted learning. In one version of the machine, students were shown a series of multiple choice questions. If they answered a question correctly, a light came on providing immediate positive reinforcement and allowing them to continue to the next question. If they answered incorrectly, nothing happened and they were able to try a different answer. The idea was that students would spend additional time studying the material to increase their chance of being reinforced the next time (Skinner, 1961). This was a way to allow children to learn at their own pace.

Negative reinforcement is another way to increase a behavior; it works by removing an undesirable stimulus. For example, car manufacturers use the principles of negative reinforcement in their seatbelt systems, which beep or ding until you fasten your seatbelt. The annoying sound stops as soon as you exhibit the desired behavior, increasing the likelihood that you will buckle up in the future. The principles of negative reinforcement also determine how likely we are to take an over-the-counter drug (like aspirin). If it got rid of a headache previously, we are more willing to take it again the next time we have a headache.

Punishment

Many people confuse negative reinforcement with punishment in operant conditioning, but they are two very different mechanisms. Remember that reinforcement, even when it is negative, always increases a behavior. In contrast, punishment always decreases a behavior. In positive punishment, you add an undesirable stimulus to decrease a behavior. An example of positive punishment is scolding a student when they are texting in class. In this case, a stimulus (the reprimand) is added in order to decrease the behavior (texting in class). With negative punishment, you take away a pleasant stimulus to decrease behavior. For example, if a child misbehaves, a caregiver might confiscate a favorite toy for a period of time. In this case, a stimulus (the toy) is removed in order to decrease the behavior.

Punishment, particularly when it is immediate, can decrease undesirable behavior, at least in the short term. Positive punishments include getting a speeding ticket for driving too fast, or getting blasted by a car horn when stepping into the road without looking. Spanking is another example of positive punishment. However, multiple studies have shown that when children are spanked, they are more likely to act out or have mental health issues in the future (Gershoff, 2002; Gershoff et al., 2010).

It is important to note that reinforcement and punishment are not simply opposites. The use of positive reinforcement in changing behavior is almost always more effective than using punishment. This is because positive reinforcement makes the person or animal feel better, helping create a positive relationship with the person providing the reinforcement. Types of positive reinforcement that are effective in everyday life include verbal praise or approval, the awarding of status or prestige, and direct financial payment. Punishment, on the other hand, is more likely to create only temporary changes in behavior because it is based on coercion and may create a negative and adversarial relationship with the person providing the punishment. When the person who provides the punishment leaves the situation, the unwanted behavior is likely to return. Today’s Western psychologists and parenting experts favor reinforcement over punishment—they recommend that you catch your child doing something good and reward them for it.

Shaping

In his operant conditioning experiments, Skinner often used an approach called shaping. Shaping is a technique that guides an animal or person toward a complex behavior by rewarding successive approximations of the behavior. Shaping is often needed to encourage the development of complex behaviors, because it is extremely unlikely that any organism will spontaneously display anything but the simplest of behaviors. In shaping, behaviors are broken down into many small, achievable steps. At each step, the animal or person is reinforced for exhibiting the behavior. Skinner used shaping to teach pigeons to enact many unusual and entertaining behaviors, such as turning in circles, walking in figure eights, and even playing ping pong; shaping is commonly used by animal trainers today.

Link to Learning

Watch this brief video of Skinner’s pigeons playing ping pong to learn more.

Watch this video clip of veterinarian Dr. Sophia Yin shaping a dog’s behavior using the steps outlined above to learn more.

It’s easy to see how shaping is effective in teaching behaviors to animals, but how does shaping work with humans? Let’s consider a common childhood milestone: learning to use the toilet. Few toddlers can get the hang of using a toilet all by themselves. Instead, most caregivers break the learning down into simple steps. For example, you might first reward your child for sitting on the potty with their clothes on. Then, after a while, you only reward your child if they sit on the potty without a diaper. The next step might be to reward your child only if they actually use the potty. Before long (hopefully), your child can remove their own clothing and use the potty or toilet when they need to go. Once a behavior like this is reliably established, we rarely have to provide reinforcement to maintain it.
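The step-by-step logic of shaping can be sketched in code. The following Python sketch is only an illustration under simplifying assumptions: the function name `shape`, the parameter `successes_to_advance`, and the rule that a fixed number of rewarded successes moves training on to the next step are all hypothetical choices made here, not a description of how Skinner or any caregiver actually decides when to raise the bar.

```python
def shape(steps, observed_behaviors, successes_to_advance=3):
    """Decide which observed behaviors get rewarded during shaping.

    steps: ordered list of successive approximations of the target behavior.
    observed_behaviors: the behaviors the learner shows, in order.
    successes_to_advance: rewarded successes needed before the next step
        becomes the only rewarded behavior (an assumed, simplified rule).
    Returns a list of True/False reward decisions, one per observed behavior.
    """
    current = 0      # index of the step currently being rewarded
    successes = 0    # rewarded successes counted at the current step
    rewards = []
    for behavior in observed_behaviors:
        if current < len(steps) and behavior == steps[current]:
            rewards.append(True)
            successes += 1
            if successes == successes_to_advance:
                current += 1   # raise the bar: earlier steps no longer earn a reward
                successes = 0
        else:
            rewards.append(False)
    return rewards


potty_steps = ["sits clothed", "sits without diaper", "uses potty"]
observed = [
    "sits clothed", "sits clothed",
    "sits without diaper", "sits clothed", "sits without diaper",
    "uses potty",
]
print(shape(potty_steps, observed, successes_to_advance=2))
# [True, True, True, False, True, True]
```

The fourth behavior is not rewarded because, by that point, sitting on the potty with clothes on is an earlier approximation that the caregiver has stopped reinforcing.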

Primary and Secondary Reinforcers

For animals, food is typically used as a reinforcer in operant conditioning studies, and to incentivize them further, most researchers stop feeding the animals before a study. What would be a good reinforcer for humans? For Jerome, it was the promise of some money if he cleaned his room. But what if you gave ice cream or candy instead? In this case, you would be using food, which is known as a primary reinforcer. Primary reinforcers relate to innate behaviors, like eating, drinking, sexual activity, temperature regulation, feeling pleasure, or relief from pain. For example, on a hot day, you might be motivated to clean your room if you know you can have an ice-cold drink when you finish.

On the other hand, secondary reinforcers have no innate value and only have reinforcing qualities when linked with a primary reinforcer. For example, money is only worth something when you can use it to buy other things—either things that satisfy basic needs (food, water, shelter—all primary reinforcers) or other secondary reinforcers. If you were on a remote island in the middle of the Pacific Ocean and you had stacks of money, the money would not be useful if you could not spend it. Some settings use tokens as secondary reinforcers, which can then be traded in for rewards and prizes. Token economies have been found to be very effective at modifying behavior in a variety of settings such as schools, prisons, and hospitals for the mentally ill. For example, Adibsereshki and Abkenar (2015) found that using tokens to reward appropriate classroom behaviors improved the science test scores in a group of eighth grade girls with intellectual disabilities.

Everyday Connection

Behavior Modification in Children

Parents and teachers often use behavior modification to change a child’s behavior. Behavior modification uses the principles of operant conditioning to accomplish behavior change so that undesirable behaviors are switched for more socially acceptable ones. Some teachers and parents create a sticker chart, in which several behaviors are listed (Figure 6.7). Sticker charts are a form of token economy. Each time children perform the behavior, they get a sticker, and after a certain number of stickers, they get a prize, or reinforcer. The goal is to increase acceptable behaviors and decrease misbehavior. In the classroom, the teacher often reinforces a wide range of behaviors, from students raising their hands, to walking quietly in the hall, to turning in their homework. At home, parents might create a behavior chart that rewards children for things such as putting away toys, brushing their teeth, and helping with dinner. In order for behavior modification to be effective, the reinforcement should be connected with the behavior and the reinforcement should matter to the child and be done consistently.

Time-out is another common technique used in behavior modification with children. It operates on the principle of negative punishment. When a child demonstrates an undesirable behavior, they are removed from the desirable activity at hand (Figure 6.8). For example, say that Sophia and her brother Mario are playing with building blocks. Sophia throws some blocks at her brother, so you give her a warning that she will have a time-out if she does it again. A few minutes later, she throws more blocks at Mario. You remove Sophia from the room for a few minutes. When she comes back, she plays with the blocks without throwing them.

Reinforcement Schedules

Western psychology suggests that the best way for a person or animal to learn a behavior through operant conditioning is by using positive reinforcement. For example, you might use positive reinforcement to teach your dog to roll over. At first, the dog might randomly happen to roll over after you tell it to, and so you give it a biscuit. If it does it again, it gets another biscuit. If you give your dog a reinforcer each time it displays the desired behavior (rolling over on command), it is called continuous reinforcement. This reinforcement schedule is often the quickest way to teach a new behavior. Providing a reinforcement immediately after the behavior is important for learning the association between the behavior and the reward. However, if you stop reinforcing the behavior, it is possible that it will disappear (extinction).

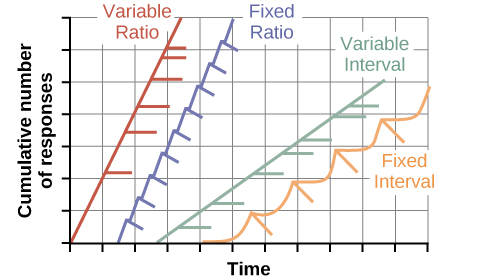

Once a behavior is trained, researchers and trainers often turn to a different reinforcement schedule—partial reinforcement. In partial reinforcement, also referred to as intermittent reinforcement, the person or animal does not get reinforced every time they perform the desired behavior. This ensures that the behavior is maintained but you don’t need to have a constant supply of dog biscuits or other rewards. There are several different types of partial reinforcement schedules (Table 6.2). These schedules are described as either fixed or variable, and as either interval or ratio. Interval means the schedule is based on the time between reinforcements, and ratio means the schedule is based on the number of responses between reinforcements; either one can be fixed (predictable) or variable. For example, a slot machine pays out on a variable schedule (Figure 6.9).

As Table 6.2 and Figure 6.10 show, different reinforcement schedules affect the behavior of the individual quite dramatically. You will probably notice that ratio schedules are more effective at increasing behaviors than interval schedules. For example, a salesperson will tend to make more sales if they are paid a small commission each time they make a sale (fixed ratio) rather than being paid weekly or monthly (fixed interval). Variable schedules also tend to increase behaviors and make them more consistent than fixed schedules. Thus, adding some uncertainty to the schedule keeps the person or animal more on their toes. Notice that the best (and quickest) way to increase behavior with partial reinforcement is to use a variable ratio schedule. Variable ratio schedules require a person or animal to keep producing the behavior to receive a reward, because they cannot predict when it will come. Slot machines pay out using a variable ratio, which is why it is often difficult to stop playing once we start. Understanding reinforcement schedules can also be helpful when caring for children. Variable ratios of reinforcement are especially effective for increasing desirable behaviors such as doing homework. However, they can also serve to increase undesirable behaviors, like temper tantrums. If a child is having a tantrum because they want something you said they can’t have, giving in every now and then (variable ratio) is likely to encourage future tantrums. So, when responding to unwanted behavior, consistency is best.

Table 6.2. Reinforcement Schedules

| REINFORCEMENT SCHEDULE | DESCRIPTION | RESULT | EXAMPLE |
|---|---|---|---|
| Fixed interval | Reinforcement is delivered at predictable time intervals (e.g., every week). | Moderate response rate with significant pauses after reinforcement | Shoe salesperson gets paid every week |
| Variable interval | Reinforcement is delivered at unpredictable time intervals (e.g., after 5, 7, 10, and 20 minutes). | Moderate yet steady response rate | Checking social media |
| Fixed ratio | Reinforcement is delivered after a predictable number of responses (e.g., after 2, 4, 6, and 8 responses). | High response rate with pauses after reinforcement | Piecework: factory worker getting paid for every x number of items manufactured |
| Variable ratio | Reinforcement is delivered after an unpredictable number of responses (e.g., after 1, 4, and 9 responses). | High and steady response rate | Gambling: slot machines |
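The four schedules in Table 6.2 differ only in the rule that decides whether a given response is reinforced, and that rule can be sketched as a short simulation. The Python below is a minimal sketch, not a model of real behavior: all function names are hypothetical, the interval schedules assume the common laboratory convention that the first response made after the interval has elapsed is the one reinforced, and the "unpredictable" requirements are simply drawn at random around an assumed average.

```python
import random


def fixed_ratio(n):
    """Reinforce every n-th response (the time of the response is ignored)."""
    count = 0
    def respond(t):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False
    return respond


def variable_ratio(mean_n, rng):
    """Reinforce after an unpredictable number of responses averaging mean_n."""
    count = 0
    target = rng.randint(1, 2 * mean_n - 1)  # assumed distribution for the sketch
    def respond(t):
        nonlocal count, target
        count += 1
        if count >= target:
            count = 0
            target = rng.randint(1, 2 * mean_n - 1)
            return True
        return False
    return respond


def fixed_interval(seconds):
    """Reinforce the first response made at least `seconds` after the last reward."""
    last = 0.0
    def respond(t):
        nonlocal last
        if t - last >= seconds:
            last = t
            return True
        return False
    return respond


def variable_interval(mean_seconds, rng):
    """Like fixed_interval, but the required wait changes unpredictably."""
    last = 0.0
    wait = rng.uniform(0, 2 * mean_seconds)  # assumed distribution for the sketch
    def respond(t):
        nonlocal last, wait
        if t - last >= wait:
            last = t
            wait = rng.uniform(0, 2 * mean_seconds)
            return True
        return False
    return respond


def rewarded(schedule, times):
    """Return the response times at which the schedule delivered a reward."""
    return [t for t in times if schedule(t)]


# One response per second for 12 seconds.
print(rewarded(fixed_ratio(2), range(1, 13)))     # [2, 4, 6, 8, 10, 12]
print(rewarded(fixed_interval(5), range(1, 13)))  # [5, 10]

rng = random.Random(0)  # seeded so that a run can be repeated
print(rewarded(variable_ratio(4, rng), range(1, 13)))
print(rewarded(variable_interval(5, rng), range(1, 13)))
```

On the ratio schedules, responding faster brings rewards sooner, whereas on the interval schedules extra responses before the wait is over earn nothing. This difference in the rules is one way to think about why Table 6.2 lists higher response rates for ratio schedules.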

Table 6.3. Comparisons of Classical and Operant Conditioning

| | CLASSICAL CONDITIONING | OPERANT CONDITIONING |
|---|---|---|
| Conditioning | Neutral stimulus paired with an unconditioned stimulus that elicits a reflex | The target behavior is followed by a consequence that influences future behavior |
| Stimulus timing | The neutral stimulus typically occurs immediately before the response. | The consequence (reinforcement or punishment) occurs soon after the response. |

What do you think?

Operant conditioning is often used in child-rearing; however, there is considerable cultural variation in how reinforcement and punishment are used. Some cultural groups (such as White middle-class Americans) value praise as a way to reinforce behaviors. However, other cultures (such as those in South Asia and China) fear that too much praise can spoil a child (Raj & Raval, 2013). In some cultures, spanking children is relatively common, and in other countries it has been banned (Pace et al., 2019). What are your childhood experiences of operant conditioning, and how have they influenced your views on using operant conditioning to shape children’s behavior?

Cognition and Latent Learning

Watson and Skinner were behaviorists who believed that all learning could be explained by conditioning (see Table 6.3 for a reminder about the differences between the two types of conditioning). But some kinds of learning are very difficult to explain using only conditioning. One example of this is when we suddenly find the solution to a problem, as if the idea just popped into our head. This cognitive process is known as insight, the sudden understanding of a solution to a problem. The German psychologist, Wolfgang Köhler (1925), found that chimpanzees who were given a problem that was not easy for them to solve also often had flashes of insight. When he placed some food in a part of their cage that was too high for them to reach, he found that the chimps first engaged in trial-and-error attempts at solving the problem. However, when these failed they seemed to stop and contemplate for a while. Then, all of a sudden, they would seem to know how to solve the problem, for instance by using a stick to knock the food down or by standing on a chair to reach it.

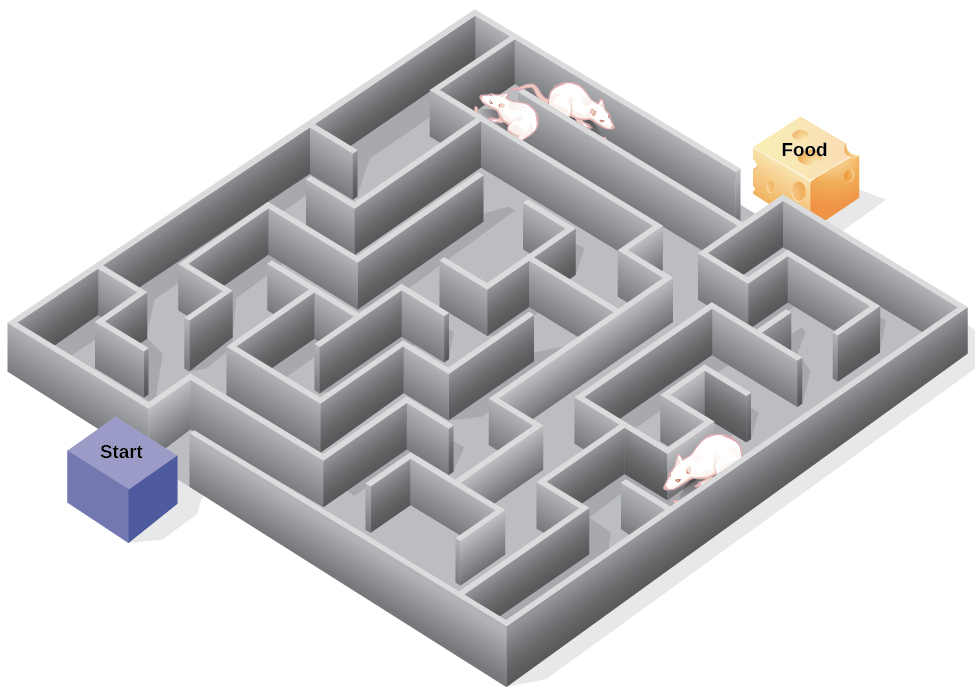

Edward C. Tolman also believed that organisms can learn even if they do not receive immediate reinforcement (Tolman & Honzik, 1930; Tolman et al., 1946). In one of his studies, Tolman studied the behavior of two groups of hungry rats that were placed in a maze (Figure 6.11). The first group always received a reward of food at the end of the maze. The second group only received food on the 11th day of the experimental period. As you might expect, the rats in the first group quickly learned to navigate the maze, but the rats in the second group seemed to wander aimlessly through it. However, on day 11, they too quickly found their way to the end of the maze to eat the food that was placed there. It was clear to Tolman that the rats had created a cognitive (mental) map of the maze in their earlier explorations but had no motivation to go through it quickly. He called this latent learning. Latent learning refers to learning that is not observable until there is a reason to demonstrate it.

Latent learning also occurs in humans. Children may learn by watching the actions of their parents but only demonstrate it at a later date, when the knowledge is needed. For example, suppose that Ravi’s dad drives him to school every day. Ravi has learned the route from his house to his school during the rides with his dad, but he’s never gone there by himself, so he has not had a chance to demonstrate that he’s learned the way. One morning Ravi’s dad has to leave early for a meeting, so he can’t drive Ravi to school. Instead, Ravi rides his bike and follows the same route that his dad would have taken in the car. This demonstrates latent learning. Ravi had learned the route to school, but had no need to demonstrate this knowledge earlier.

Everyday Connection

This Place Is Like a Maze

Have you ever gotten lost in a building and couldn’t find your way back out? While that can be frustrating, you’re not alone. At one time or another we’ve all gotten lost in places like a museum, hospital, or university library. Whenever we go someplace new, we build a mental representation, or cognitive map, of the location, just as Tolman’s rats built a cognitive map of their maze. However, some buildings are confusing because they include many areas that look alike or have short lines of sight. Because of this, it’s often difficult to predict what’s around a corner or decide whether to turn left or right to get out of a building. Psychologist Laura Carlson (2010) suggests that what we place in our cognitive map can impact our success in navigating through the environment. She suggests that paying attention to specific features upon entering a building, such as a picture on the wall, a fountain, a statue, or an escalator, adds information to our cognitive map that can be used later to help find our way out of the building.

Link to Learning

Watch this video about Carlson’s studies on cognitive maps and navigation in buildings to learn more.