Module 6: Probability and Probability Distributions

Probability Rules (3 of 3)


Learning Outcomes

- Use conditional probability to identify independent events.

Independence and Conditional Probability

Recall that in the previous module, Relationships in Categorical Data with Intro to Probability, we introduced the idea of the conditional probability of an event.

Here are some examples:

- the probability that a randomly selected female college student is in the Health Science program: P(Health Science | female)

- P(a person is not a drug user given that the person had a positive test result) = P(not a drug user | positive test result)

Now we ask: How can we determine whether two events are independent?

Example

Identifying Independent Events

Is enrollment in the Health Science program independent of whether a student is female? Or is there a relationship between these two events?

| | Arts-Sci | Bus-Econ | Info Tech | Health Science | Graphics Design | Culinary Arts | Row Totals |
|---|---|---|---|---|---|---|---|
| Female | 4,660 | 435 | 494 | 421 | 105 | 83 | 6,198 |
| Male | 4,334 | 490 | 564 | 223 | 97 | 94 | 5,802 |
| Column Totals | 8,994 | 925 | 1,058 | 644 | 202 | 177 | 12,000 |

To answer this question, we compare the probability that a randomly selected student is a Health Science major with the probability that a randomly selected female student is a Health Science major. If these two probabilities are the same (or very close), we say that the events are independent. In other words, independence means that being female does not affect the likelihood of enrollment in a Health Science program.

In symbols, we compare:

- the unconditional probability: P(Health Science)

- the conditional probability: P(Health Science | female)

If these probabilities are equal (or at least close to equal), then we can conclude that enrollment in Health Science is independent of being female. If the probabilities are substantially different, then we say the events are dependent.

[latex]P(\text{Health Science}) = \frac{644}{12{,}000} \approx 0.054 \quad (\text{marginal probability; an unconditional probability})[/latex]

[latex]P(\text{Health Science} \mid \text{female}) = \frac{421}{6{,}198} \approx 0.068 \quad (\text{conditional probability})[/latex]

Both the conditional and unconditional probabilities are small; however, 0.068 is relatively large compared to 0.054. The ratio of the two numbers is 0.068 / 0.054 ≈ 1.26, so the conditional probability is about 26% larger than the unconditional probability. It is much more likely that a randomly selected female student is in the Health Science program than that a randomly selected student, without regard for gender, is in the Health Science program. The difference is large enough to suggest a relationship between being female and being enrolled in the Health Science program, so these events are dependent.
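The comparison above can be reproduced directly from the table counts. This is a minimal sketch using Python's standard `fractions` module so the probabilities stay exact until they are printed; the variable names are illustrative, not part of the course material.

```python
from fractions import Fraction

# Counts taken from the enrollment table
female_health = 421   # female students in Health Science
female_total = 6198   # row total for female students
health_total = 644    # column total for Health Science
grand_total = 12000   # all students

# Unconditional (marginal) probability: P(Health Science)
p_health = Fraction(health_total, grand_total)

# Conditional probability: P(Health Science | female)
p_health_given_female = Fraction(female_health, female_total)

# How many times larger the conditional probability is
ratio = p_health_given_female / p_health

print(f"P(Health Science)          = {float(p_health):.3f}")               # 0.054
print(f"P(Health Science | female) = {float(p_health_given_female):.3f}")  # 0.068
print(f"ratio                      = {float(ratio):.2f}")                  # 1.27
```

Working with exact fractions instead of rounded decimals gives a ratio of about 1.27; the small gap from the ratio of the rounded values is only a rounding effect, and the conclusion is the same.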

Comment:

To determine if enrollment in the Health Science program is independent of whether a student is female, we can also compare the probability that a student is female with the probability that a Health Science student is female.

[latex]P(\text{female}) = \frac{6{,}198}{12{,}000} \approx 0.517[/latex]

[latex]P(\text{female} \mid \text{Health Science}) = \frac{421}{644} \approx 0.654[/latex]

We see again that the probabilities are not equal. Equal probabilities would have a ratio of one. The ratio is [latex]\frac{0.517}{0.654} \approx 0.79[/latex], which is not close to one. It is much more likely that a randomly selected Health Science student is female than that a randomly selected student is female. This is another way to see that these events are dependent.
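The reverse comparison can be checked the same way. This sketch (again using Python's `fractions` module, with illustrative variable names) also confirms a fact worth noticing: the reciprocal of this ratio is exactly the ratio from the first comparison, because both equal P(female and Health Science) divided by P(female) · P(Health Science).

```python
from fractions import Fraction

# Counts from the enrollment table
female_health = 421   # female Health Science students
female_total = 6198   # row total for female students
health_total = 644    # column total for Health Science
grand_total = 12000   # all students

p_female = Fraction(female_total, grand_total)                 # P(female)
p_female_given_health = Fraction(female_health, health_total)  # P(female | Health Science)

# Same orientation as the text: unconditional / conditional
ratio = p_female / p_female_given_health
print(f"P(female) / P(female | Health Science) = {float(ratio):.2f}")  # 0.79

# The reciprocal matches P(Health Science | female) / P(Health Science)
p_health = Fraction(health_total, grand_total)
p_health_given_female = Fraction(female_health, female_total)
print(1 / ratio == p_health_given_female / p_health)  # True
```

Whichever event we condition on, the comparison points to the same conclusion: the events are dependent.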

To summarize:

If P(A | B) = P(A), then the two events A and B are independent. To say that two events are independent means that the occurrence of one event makes the other neither more nor less probable.


In Relationships in Categorical Data with Intro to Probability, we explored marginal, conditional, and joint probabilities. We now develop a useful rule that relates marginal, conditional, and joint probabilities.

Example

A Rule That Relates Joint, Marginal, and Conditional Probabilities

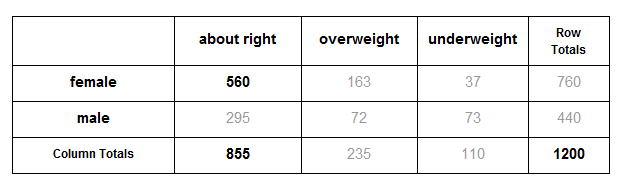

Let’s consider our body image two-way table. Here are three probabilities we calculated earlier:

Marginal probability: [latex]P(\text{about right}) = \frac{855}{1{,}200}[/latex]

Conditional probability: [latex]P(\text{female} \mid \text{about right}) = \frac{560}{855}[/latex]

Joint probability: [latex]P(\text{female and about right}) = \frac{560}{1{,}200}[/latex]

Note that these three probabilities use only three numbers from the table: 560, 855, and 1,200. (We grayed out the rest of the table so we can focus on these three numbers.)

Now observe what happens if we multiply the marginal and conditional probabilities from above.

[latex]P(\text{about right}) \cdot P(\text{female} \mid \text{about right}) = \frac{855}{1{,}200} \cdot \frac{560}{855} = \frac{560}{1{,}200}[/latex]

The result, 560/1,200, is exactly the value we found for the joint probability.

When we write this relationship as an equation, we have an example of a general rule that relates joint, marginal, and conditional probabilities.

[latex]P(\text{about right}) \cdot P(\text{female} \mid \text{about right}) = P(\text{female and about right})[/latex]

[latex]\text{marginal probability} \cdot \text{conditional probability} = \text{joint probability}[/latex]

In words, we could say:

- The joint probability equals the product of the marginal and conditional probabilities
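The cancellation in the body image example can be verified with exact arithmetic. This is a minimal sketch using Python's `fractions` module; it uses only the three numbers named above (560, 855, and 1,200), and the variable names are illustrative.

```python
from fractions import Fraction

# The three numbers used from the body image table
female_about_right = 560   # females who answered "about right"
about_right_total = 855    # all "about right" responses
grand_total = 1200         # all respondents

marginal = Fraction(about_right_total, grand_total)            # P(about right)
conditional = Fraction(female_about_right, about_right_total)  # P(female | about right)
joint = Fraction(female_about_right, grand_total)              # P(female and about right)

# marginal * conditional: the 855s cancel, leaving 560/1200
product = marginal * conditional
print(product, product == joint)  # 7/15 True  (560/1200 reduced to lowest terms)
```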

This relationship holds in general. If A and B are any two events, then

P(A and B) = P(A) · P(B | A)

This rule has no conditions; it always works.

When the events are independent, P(B | A) = P(B), so our rule becomes

P(A and B) = P(A) · P(B)

This version of the rule works only when the events are independent. For this reason, some people use this relationship to identify independent events. They reason this way:

If P(A and B) = P(A) · P(B) is true, then the events are independent.

Comment:

Here we want to remind you that it is sometimes easier to think through probability problems without worrying about rules. This is particularly easy to do when you have a table of data. But if you use a rule, be careful to check the conditions required for using it.

Example

Relating Marginal, Conditional, and Joint Probabilities

What is the probability that a student is both male and enrolled in the Info Tech program?

There are two ways to figure this out:

(1) Just use the table to find the joint probability:

[latex]P(\text{male and Info Tech}) = \frac{564}{12{,}000} = 0.047[/latex]

(2) Or use the rule:

[latex]P(\text{male and Info Tech}) = P(\text{male}) \cdot P(\text{Info Tech} \mid \text{male}) = \frac{5{,}802}{12{,}000} \cdot \frac{564}{5{,}802} = \frac{564}{12{,}000} = 0.047[/latex]
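Both approaches can be checked side by side. This minimal sketch uses Python's `fractions` module so that the two results can be compared exactly; the variable names are illustrative.

```python
from fractions import Fraction

male_infotech = 564   # male Info Tech students
male_total = 5802     # row total for male students
grand_total = 12000   # all students

# (1) Read the joint probability straight from the table
direct = Fraction(male_infotech, grand_total)

# (2) Multiplication rule: P(male) * P(Info Tech | male)
via_rule = Fraction(male_total, grand_total) * Fraction(male_infotech, male_total)

print(direct == via_rule, float(direct))  # True 0.047
```

The row total 5,802 cancels in the rule, which is why both methods always agree.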


All of the examples of independent events that we have encountered thus far have involved two-way tables. The next example illustrates how this concept can be used in another context.

Example

A Coin Experiment

Consider the following simple experiment. You and a friend each take out a coin and flip it. What is the probability that both coins come up heads?

Let’s start by listing what we know. There are two events, each with probability ½.

- P(your coin comes up heads) = ½

- P(your friend’s coin comes up heads) = ½

We also know that these two events are independent, since the probability of getting heads on either coin is in no way affected by the result of the other coin toss.

We are therefore justified in simply multiplying the individual probabilities:

(½) (½) = ¼

Conclusion: There is a 1 in 4 chance that both coins will come up heads.
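Because each coin is fair and the tosses are independent, the four outcomes for (your coin, friend's coin) are equally likely. This sketch lists them with Python's `itertools.product` and counts the favorable one, confirming that the count-based answer matches the product of the individual probabilities. The use of "H" and "T" labels is an illustrative choice.

```python
from fractions import Fraction
from itertools import product

# Every equally likely outcome for (your coin, friend's coin)
outcomes = list(product("HT", repeat=2))
both_heads = [o for o in outcomes if o == ("H", "H")]

p_both = Fraction(len(both_heads), len(outcomes))
print(outcomes)  # [('H', 'H'), ('H', 'T'), ('T', 'H'), ('T', 'T')]
print(p_both, p_both == Fraction(1, 2) * Fraction(1, 2))  # 1/4 True
```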

If we extended this experiment to three people each flipping a coin, then we would have three independent events. Again we would multiply the individual probabilities:

(½) (½) (½) = ⅛

Conclusion: There is a 1 in 8 chance that all three coins will come up heads.
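The same idea extends to any number of coins. This sketch offers two hypothetical helper functions: one computes the exact probability by listing all 2ⁿ outcomes, and one estimates it by simulating independent fair flips with Python's `random` module. The trial count and seed are arbitrary illustrative choices, so the simulated value will be close to, but generally not exactly, 0.125.

```python
import random
from fractions import Fraction
from itertools import product


def p_all_heads_exact(n):
    """Exact P(all n fair coins show heads), found by listing all 2**n outcomes."""
    outcomes = list(product("HT", repeat=n))
    hits = sum(1 for o in outcomes if all(side == "H" for side in o))
    return Fraction(hits, len(outcomes))


def p_all_heads_simulated(n, trials=100_000, seed=1):
    """Monte Carlo estimate: flip n independent fair coins `trials` times."""
    rng = random.Random(seed)
    # all() stops at the first tail; skipping the remaining flips
    # does not change whether that trial counts as "all heads"
    hits = sum(
        all(rng.random() < 0.5 for _ in range(n))
        for _ in range(trials)
    )
    return hits / trials


print(p_all_heads_exact(3))      # 1/8
print(p_all_heads_simulated(3))  # an estimate near 0.125
```

The exact count and the product rule, (½)(½)(½) = ⅛, agree because the flips are independent.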

- Concepts in Statistics. Provided by: Open Learning Initiative. Located at: http://oli.cmu.edu. License: CC BY: Attribution